Drop duplicates in PySpark

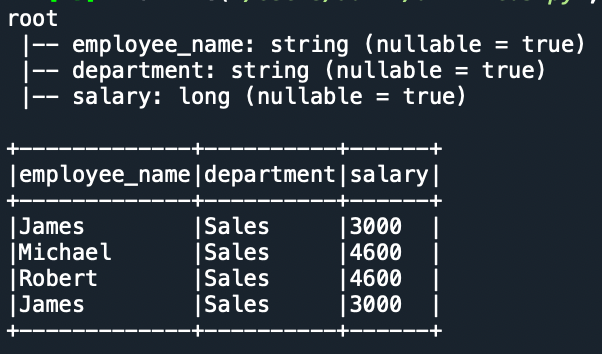

There are three common ways to drop duplicate rows from a PySpark DataFrame: drop rows that are duplicated across all columns, drop rows that are duplicated across a subset of columns, or drop rows that are duplicated in a single column. To drop rows that have duplicate values across all columns in the DataFrame, call `df.dropDuplicates()` with no arguments.

What is the difference between the PySpark `distinct()` and `dropDuplicates()` methods? Both are used to drop duplicate rows from a DataFrame and return a new DataFrame with unique rows. The main difference is that `distinct()` always compares all columns of the DataFrame, whereas `dropDuplicates()` can also compare only a selected subset of columns. The syntax for distinct is `DataFrame.distinct()`, which returns a new DataFrame containing the distinct rows in this DataFrame.

PySpark is the Python API for Apache Spark, developed by the Apache Spark community, which lets you process large datasets and analyse the results from a Python environment. Just like any other data frame, a PySpark data frame stores data in a tabular manner, but Spark data frames differ from other data frames in that the data is distributed across a cluster and follows a schema. Spark processes data in memory (RAM) where possible and can analyse data very quickly, so it is well suited to both batch processing and stream processing, which is a major reason for its popularity. It works with structured as well as semi-structured data and provides high-level APIs in Python, Java, Scala and R for processing complex datasets. You can install the PySpark module on Windows with the following command: `pip install pyspark`.

In this article, we will create a PySpark data frame consisting of information related to different car racers and discuss the different methods to drop duplicate rows from it. A PySpark data frame requires a SparkSession, which serves as the entry point and sets up the required configuration so that the Spark framework functions properly. After completing the configuration, we prepared a dataset dictionary consisting of different car features and used it to generate a pandas data frame. We then used the pandas data frame to generate a PySpark data frame, although this intermediate step is not mandatory.

In the pandas API on Spark, `pyspark.pandas.DataFrame.drop_duplicates()` also accepts a `keep` parameter, which determines which duplicates, if any, to keep: `'first'` (the default), `'last'`, or `False` to drop every duplicated row.

The syntax for dropDuplicates is `DataFrame.dropDuplicates(subset=None)`. It returns a new DataFrame with duplicate rows removed; when column names are passed as arguments, it only considers the selected columns. In short, `distinct()` and `dropDuplicates()` are both methods of the DataFrame class, and both return a new DataFrame after eliminating duplicate rows.

On the above DataFrame, we have a total of 10 rows, 2 of which have all values duplicated, so performing `distinct()` on this DataFrame should return 9 rows after removing 1 duplicate row. We created a reference data frame and dropped duplicate values from it. Published by Zach. Devesh Chauhan. Updated on: May

In this article, you will learn how to use the `distinct()` and `dropDuplicates()` functions with PySpark examples. We use this DataFrame to demonstrate how to get distinct values across multiple columns.

Calling `distinct()`, or `dropDuplicates()` with no arguments, eliminates all the duplicate rows from the data frame. To drop rows that have duplicate values in the team column of the DataFrame, pass that column name in a list: `df.dropDuplicates(['team'])`. Naveen's journey in the field of data engineering has been one of continuous learning, innovation, and a strong commitment to data integrity.