FFmpeg threads

It wasn't that long ago that reading, processing, and rendering the contents of a single image took a noticeable amount of time. But both hardware and software techniques have gotten significantly faster.

From a bug report opened and closed 10 years ago (last modified 9 years ago): "FFmpeg ignores the -threads option with libx." It is normal for libx to take up more CPU than libx. This does not mean that libx is not using multithreading.


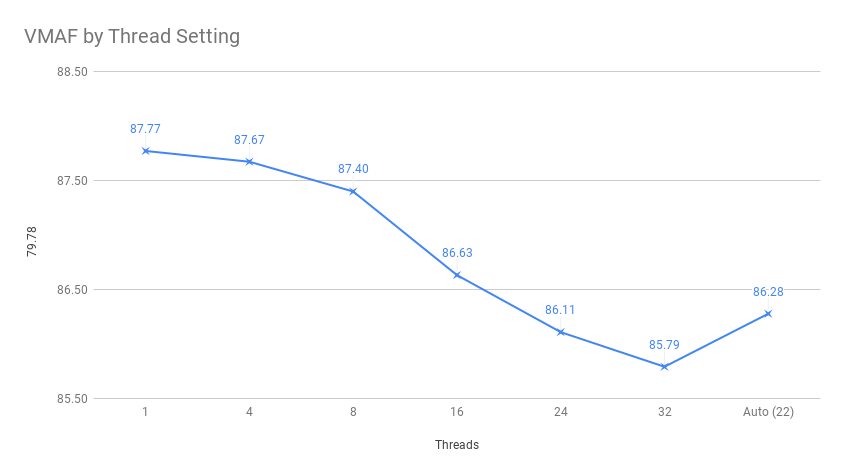

This article details how the FFmpeg -threads option affects performance, overall quality, and transient quality for live and VOD encoding. As we have all learned too many times, there are no simple questions when it comes to encoding. So, hundreds of encodes and three days later, here are the questions I will answer.

On a multiple-core computer, the -threads option controls how many threads FFmpeg uses. The value FFmpeg uses varies depending on the number of cores in your computer. Note that this file is 60p. I produced it on my 8-core HP ZBook notebook, and you see the threads value of … For a simulated live capture operation, the value was … Pretty much the way you would expect. In a simulated capture scenario, outputting …p video at 60 fps and using the -re switch to read the incoming file in real time, you get the following.
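A minimal sketch of where the two switches from the simulated-capture test go on the command line. It assumes libx264 as the encoder and uses a hypothetical helper name, `build_encode_cmd`; it only builds the argument list and does not run ffmpeg. Because ffmpeg applies options to the next file named, -re (an input option) must come before -i, while -threads placed after -i and before the output file applies to the encoder.

```python
import shlex
from typing import List, Optional


def build_encode_cmd(src: str, dst: str, threads: Optional[int] = None,
                     realtime: bool = False,
                     vcodec: str = "libx264") -> List[str]:
    """Build an ffmpeg argument list (hypothetical helper, not part of FFmpeg).

    ffmpeg applies options to the next file named on the command line, so
    -re (an input option) must precede -i, while -threads placed after -i
    and before the output file applies to the output encoder.
    """
    cmd = ["ffmpeg"]
    if realtime:
        cmd.append("-re")  # read the input at its native frame rate
    cmd += ["-i", src, "-c:v", vcodec]
    if threads is not None:
        if threads < 0:
            raise ValueError("threads must be >= 0 (0 requests automatic detection)")
        cmd += ["-threads", str(threads)]  # encoder thread count
    cmd.append(dst)
    return cmd


if __name__ == "__main__":
    # Simulated live capture on an 8-core machine, printed as a shell command.
    print(shlex.join(build_encode_cmd("input.mp4", "output.mp4",
                                      threads=8, realtime=True)))
```

Run as a script, this prints `ffmpeg -re -i input.mp4 -c:v libx264 -threads 8 output.mp4`. Returning a list rather than a string keeps it safe to pass straight to `subprocess.run` without shell quoting.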

I think that if you're operating at Google's scale, using single-threaded ffmpeg will finish your jobs in less time, because you can run many single-threaded jobs in parallel. Stream handling is set via the -codec option addressed to streams within a specific output file.
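The batch strategy above can be sketched as one single-threaded ffmpeg process per file, with a fixed number running at a time. This is an illustration under assumptions, not a measured recommendation: it assumes independent input/output pairs, libx264, and an `ffmpeg` binary on the PATH, and the names `single_thread_cmd` and `run_batch` are hypothetical. Whether it beats one multi-threaded encode depends on your workload.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Sequence, Tuple


def single_thread_cmd(src: str, dst: str) -> List[str]:
    # Hypothetical per-job command: libx264 limited to one encoder thread.
    return ["ffmpeg", "-y", "-i", src, "-c:v", "libx264", "-threads", "1", dst]


def _run_ffmpeg(cmd: List[str]) -> int:
    # Default runner: launch ffmpeg and return its exit code.
    return subprocess.run(cmd).returncode


def run_batch(jobs: Sequence[Tuple[str, str]], workers: int,
              runner: Optional[Callable[[List[str]], int]] = None) -> List[int]:
    """Run one single-threaded ffmpeg process per job, `workers` at a time.

    Returns the exit code of each job, in the same order as `jobs`.
    """
    run = runner if runner is not None else _run_ffmpeg
    cmds = [single_thread_cmd(src, dst) for src, dst in jobs]
    # The pool threads only wait on child processes; the encoding itself
    # happens in separate ffmpeg processes that the OS schedules across cores.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run, cmds))
```

The `runner` parameter exists so the scheduling can be exercised without ffmpeg installed. On a 24-core machine you might start with `workers=24` and measure total wall-clock time against a single multi-threaded run.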

Anything found on the command line which cannot be interpreted as an option is considered to be an output url. Selecting which streams from which inputs will go into which output is done either automatically or with the -map option (see the Stream selection chapter). To refer to input files in options, you must use their indices (0-based). Similarly, streams within a file are referred to by their indices. Also see the Stream specifiers chapter. As a general rule, options are applied to the next specified file.
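A short sketch of these rules, assuming two hypothetical input files: one providing video and one providing audio. The helper name `mux_cmd` is illustrative only. The `-map` values use the input-index and stream-specifier syntax described above, and the trailing file name is not an option, so ffmpeg treats it as the output url.

```python
from typing import List


def mux_cmd(video_src: str, audio_src: str, dst: str) -> List[str]:
    """Hypothetical helper: video from input 0, audio from input 1."""
    return [
        "ffmpeg",
        "-i", video_src,   # input file index 0
        "-i", audio_src,   # input file index 1
        "-map", "0:v:0",   # first video stream of input 0
        "-map", "1:a:0",   # first audio stream of input 1
        "-c", "copy",      # applies to the next file: copy streams, no re-encode
        dst,               # not an option, so ffmpeg treats it as the output url
    ]
```

Stream copy is used here only to keep the example focused on selection; swap `-c copy` for real codec options when re-encoding.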



I tried to run ffmpeg to convert an MKV video to MP4 for online streaming, and it does not utilize all 24 cores. An example of the top output is below. I've read similar questions on Stack Exchange sites, but all are unanswered and years old. I tried adding the -threads parameter before and after -i, with values of 0, 24, 48, and various others, but it seems to ignore this input. I'm not scaling the video either.


The -readrate option limits the input read speed. Its value is a positive floating-point number that represents the maximum duration of media, in seconds, that should be ingested in one second of wallclock time. With -map you can select from which stream the timestamps should be taken. With -copytb 1, the time base is copied to the output encoder from the corresponding input demuxer. The subtitle stream of C.

The kernel multiplies your efforts for you. This is how videos uploaded to Facebook or YouTube become available so quickly. Why would I want to wreck that by asking a model to do it for me? That's a good point. Have you tried some sort of refactoring in it? So if I show you an LLM implementing concurrency, will you concede the point?
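The floating-point read speed described above is set with ffmpeg's -readrate input option; per the FFmpeg documentation, -re is equivalent to -readrate 1. A minimal sketch, assuming an FFmpeg build recent enough to support -readrate and using a hypothetical helper name, `readrate_cmd`:

```python
import math
from typing import List


def readrate_cmd(src: str, dst: str, speed: float) -> List[str]:
    """Hypothetical helper: read `src` at `speed` seconds of media per wallclock second.

    -readrate is an input option, so it must come before -i.
    """
    if not (math.isfinite(speed) and speed > 0):
        raise ValueError("speed must be a positive, finite number")
    # ":g" drops a trailing ".0", so 2.0 is passed as "2" and 0.5 as "0.5".
    return ["ffmpeg", "-readrate", f"{speed:g}", "-i", src, "-c", "copy", dst]
```

A speed of 1 reproduces the -re behavior used in the simulated live tests; values above 1 ingest faster than real time, which can shorten a simulated capture while still throttling input.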

The libavcodec library now contains a native VVC (Versatile Video Coding) decoder, supporting a large subset of the codec's features.

The -h full option prints the complete list of options, including shared and private options for encoders, decoders, demuxers, muxers, filters, etc. In particular, codec options are applied by ffmpeg after the stream selection process and thus do not influence it. Simple filtergraphs are configured with the per-stream -filter option, with -vf and -af aliases for video and audio respectively. With -copyts, ffmpeg keeps input timestamps as they are; in particular, it does not remove the initial start time offset value.

Adding -threads 12 had no effect. I used this FFmpeg command for these tests. Hope you're looking for good-faith discussion here. As multi-core as Python and Ruby, then. As Slavik pointed out in a comment, the option is "-threads", not "-treads".
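As a sketch of the simple-filtergraph syntax mentioned above, the hypothetical helper `simple_filter_cmd` below attaches one video filtergraph with -vf and one audio filtergraph with -af. The scale and volume filters are chosen only as familiar examples; the dimensions and gain are illustrative parameters, not values from the tests.

```python
from typing import List


def simple_filter_cmd(src: str, dst: str, width: int, height: int,
                      gain: float) -> List[str]:
    """Hypothetical helper: one simple video and one simple audio filtergraph."""
    return [
        "ffmpeg", "-i", src,
        "-vf", f"scale={width}:{height}",  # alias for -filter:v
        "-af", f"volume={gain}",           # alias for -filter:a
        dst,
    ]
```

Each simple filtergraph has exactly one input and one output of the same type, which is why the per-stream -vf and -af aliases are enough here; graphs that combine several inputs need -filter_complex instead.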
