Flink keyby

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies. The basic transformations are:

- Map: takes one element and produces one element, for example a map function that doubles the values of the input stream.
- FlatMap: takes one element and produces zero, one, or more elements, for example a flatmap function that splits sentences into words.
- Filter: evaluates a boolean function for each element and retains those for which the function returns true, for example a filter that filters out zero values.
- KeyBy: logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy is implemented with hash partitioning. There are different ways to specify keys.
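The semantics of these four transformations can be sketched in plain Python over a finite list of records. This is a model, not the Flink API (in Java these are the `map`, `flatMap`, `filter`, and `keyBy` methods on `DataStream`). The helper names `map_op`, `flat_map_op`, `filter_op`, and `key_by_op` are hypothetical, and Python's built-in `hash` is an assumed stand-in for the hashing Flink actually uses to assign keys to partitions.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")
K = TypeVar("K")


def map_op(stream: Iterable[T], fn: Callable[[T], R]) -> List[R]:
    # Map: exactly one output element per input element.
    return [fn(x) for x in stream]


def flat_map_op(stream: Iterable[T], fn: Callable[[T], Iterable[R]]) -> List[R]:
    # FlatMap: zero, one, or more output elements per input element.
    return [y for x in stream for y in fn(x)]


def filter_op(stream: Iterable[T], pred: Callable[[T], bool]) -> List[T]:
    # Filter: keep only the elements for which the predicate returns True.
    return [x for x in stream if pred(x)]


def key_by_op(stream: Iterable[T], key_fn: Callable[[T], K],
              parallelism: int) -> Dict[int, List[T]]:
    # KeyBy: hash the key to choose a partition, so every record with the
    # same key lands in the same partition. Python's hash() is only a
    # stand-in here; Flink uses its own key-group hashing.
    partitions: Dict[int, List[T]] = defaultdict(list)
    for x in stream:
        partitions[hash(key_fn(x)) % parallelism].append(x)
    return dict(partitions)


doubled = map_op([1, 2, 3], lambda v: v * 2)
words = flat_map_op(["to be", "or not"], lambda s: s.split(" "))
non_zero = filter_op([0, 5, 0, 7], lambda v: v != 0)
parts = key_by_op(["a", "b", "a", "c", "a"], lambda w: w, parallelism=3)
```

Python salts string hashes per process, so the partition numbers in `parts` change from run to run. Only the property that records with equal keys share a partition within one run is meaningful in this model.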

In the corresponding physical model, data exchange may exist between all instances of C and all instances of A and B. The API gives fine-grained control over chaining if desired.
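What chaining buys can be sketched with a plain-Python model: consecutive one-to-one operators are fused into a single function, so a record passes through all of them by ordinary function calls instead of being buffered and handed to another task between steps. This is an illustration of operator fusion under that assumption, not Flink's runtime; in the Java DataStream API the corresponding controls are `startNewChain()` and `disableChaining()` on an operator and `disableOperatorChaining()` on the `StreamExecutionEnvironment`. The names `Op`, `run_unchained`, `chain`, and `run_chained` are hypothetical.

```python
from typing import Callable, Iterable, List, Optional

# Hypothetical operator shape: returns a new record, or None to drop it.
Op = Callable[[int], Optional[int]]


def run_unchained(stream: Iterable[int], ops: List[Op]) -> List[int]:
    # Each operator materializes its whole output before the next one runs,
    # standing in for buffering and handover between separate tasks.
    data = list(stream)
    for op in ops:
        data = [y for y in (op(x) for x in data) if y is not None]
    return data


def chain(ops: List[Op]) -> Op:
    # Fuse the operators into one function: a record flows through all of
    # them via plain calls, which is what a chained task does.
    def fused(x: int) -> Optional[int]:
        for op in ops:
            result = op(x)
            if result is None:
                return None  # dropped by a filter-like operator
            x = result
        return x
    return fused


def run_chained(stream: Iterable[int], ops: List[Op]) -> List[int]:
    fused = chain(ops)
    return [y for y in (fused(x) for x in stream) if y is not None]


ops: List[Op] = [
    lambda v: v * 2,                 # map: double the value
    lambda v: v if v > 2 else None,  # filter: keep values greater than 2
    lambda v: v + 1,                 # map: add one
]
```

Both runners produce the same records; chaining changes only how the work is scheduled, which is why Flink can apply it automatically and still let you opt out per operator.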

This article explains the basic concepts, installation, and deployment process of Flink. The definition of stream processing may vary. Conceptually, stream processing and batch processing are two sides of the same coin. Their relationship resembles two ways of handling the elements of a Java ArrayList: treating them directly as a finite data set and accessing them by index, or accessing them one at a time through an iterator. On the left of Figure 1 is a coin classifier, which we can describe as a stream processing system.
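The ArrayList comparison can be illustrated with a small Python analogue, with Python lists and iterators standing in for Java's `ArrayList` and `Iterator`. The function names are hypothetical. The batch version needs the whole, finite data set up front. The streaming version consumes one element at a time and emits an updated result after each, so it also works on an unbounded source.

```python
import itertools
from typing import Iterator, List


def batch_sum(data: List[int]) -> int:
    # Batch view: the data set is finite and fully available, so it can be
    # accessed by index.
    total = 0
    for i in range(len(data)):
        total += data[i]
    return total


def running_sums(source: Iterator[int]) -> Iterator[int]:
    # Stream view: pull elements through an iterator and emit a result after
    # each one. len() is never needed, so the source may be unbounded.
    total = 0
    for value in source:
        total += value
        yield total


finite = [3, 1, 4, 1, 5]
unbounded = itertools.count(1)  # 1, 2, 3, ... never ends
first_five = list(itertools.islice(running_sums(unbounded), 5))
```

On a finite input, the last value emitted by `running_sums` equals the batch result, which is the "two sides of the same coin" relationship in miniature.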

In this section you will learn about the APIs that Flink provides for writing stateful programs. Please take a look at Stateful Stream Processing to learn about the concepts behind stateful stream processing. If you want to use keyed state, you first need to specify a key on a DataStream that should be used to partition the state and also the records in the stream themselves. This will yield a KeyedStream, which then allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record. The key can be of any type and must be derived from deterministic computations. The data model of Flink is not based on key-value pairs. Therefore, you do not need to physically pack the data set types into keys and values.
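A plain-Python model can show how a key selector and keyed state fit together. In Flink's Java API you would call `keyBy` with a `KeySelector` to obtain a `KeyedStream`, and state such as `ValueState` is then scoped to the key of the record being processed. The sketch below only mimics that scoping with a dictionary; `Click`, `key_selector`, and `KeyedCounter` are hypothetical names. Note that the record is an ordinary object, not a key-value pair: the key is derived from one of its fields.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Generic, Hashable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class Click:
    # Hypothetical record type; no explicit key/value packing is needed.
    user: str
    url: str


def key_selector(click: Click) -> str:
    # Deterministic: the same record always yields the same key.
    return click.user


class KeyedCounter(Generic[T]):
    """Model of a keyed operator holding one counter per key (keyed state)."""

    def __init__(self, selector: Callable[[T], Hashable]) -> None:
        self._selector = selector
        self._state: Dict[Hashable, int] = {}  # key -> count

    def process(self, record: T) -> Tuple[Hashable, int]:
        key = self._selector(record)
        count = self._state.get(key, 0) + 1  # read state of the current key only
        self._state[key] = count             # write state of that key
        return key, count


def run(records: Iterable[Click]) -> List[Tuple[Hashable, int]]:
    op = KeyedCounter(key_selector)
    return [op.process(r) for r in records]


events = [Click("alice", "/a"), Click("bob", "/b"), Click("alice", "/c")]
output = run(events)
```

The requirement that keys be deterministic matters because the same key must always map to the same state (and, in Flink, the same partition); a selector based on randomness or wall-clock time would scatter one logical key across many state entries.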

Each node in the DAG represents a basic logical unit: the operator mentioned earlier. Many Scala APIs pass type information through implicit parameters, so if you need to call a Scala API from Java, you must pass the type information explicitly.

The TTL functionality can then be enabled in any state descriptor by passing it the TTL configuration. Another option is to trigger cleanup of some state entries incrementally.

Each parallel instance of the Kafka consumer maintains a map of topic partitions and offsets as its Operator State. Therefore, this method receives an initial value. Generally, a stream processing system uses a data-driven processing method to support the processing of infinite datasets.
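The TTL options mentioned above (expired values never being returned, plus incremental cleanup) can be illustrated with a toy model. In Flink itself you build a `StateTtlConfig`, enable it on a state descriptor with `enableTimeToLive`, and can request incremental cleanup through the builder's `cleanupIncrementally` option. The Python class below is a hypothetical sketch of those semantics, not Flink's implementation: time is passed in explicitly, the timestamp is refreshed on every write, and each access examines a few entries in round-robin order.

```python
from collections import deque
from typing import Deque, Dict, Hashable, Optional, Set, Tuple


class TtlValueState:
    """Toy model of keyed state with a time-to-live (hypothetical)."""

    def __init__(self, ttl: float, cleanup_size: int = 2) -> None:
        self.ttl = ttl
        self.cleanup_size = cleanup_size
        self.entries: Dict[Hashable, Tuple[object, float]] = {}
        self._queue: Deque[Hashable] = deque()  # round-robin cleanup order
        self._tracked: Set[Hashable] = set()    # keys currently in the queue

    def _is_expired(self, written_at: float, now: float) -> bool:
        return now - written_at >= self.ttl

    def _cleanup_step(self, now: float) -> None:
        # Incremental cleanup: inspect at most cleanup_size queued keys.
        for _ in range(min(self.cleanup_size, len(self._queue))):
            key = self._queue.popleft()
            entry = self.entries.get(key)
            if entry is None or self._is_expired(entry[1], now):
                self.entries.pop(key, None)
                self._tracked.discard(key)
            else:
                self._queue.append(key)  # still live: revisit later

    def update(self, key: Hashable, value: object, now: float) -> None:
        self.entries[key] = (value, now)  # a write refreshes the timestamp
        if key not in self._tracked:
            self._tracked.add(key)
            self._queue.append(key)
        self._cleanup_step(now)

    def value(self, key: Hashable, now: float) -> Optional[object]:
        self._cleanup_step(now)
        entry = self.entries.get(key)
        if entry is None:
            return None
        if self._is_expired(entry[1], now):
            del self.entries[key]  # never return expired data; drop on read
            return None
        return entry[0]


state = TtlValueState(ttl=10.0)
state.update("alice", 1, now=0.0)
state.update("bob", 2, now=5.0)
```

Cleanup on read alone would leave expired entries that are never read again; the incremental step bounds that leftover state at the cost of a little extra work per access.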

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation. We intentionally omitted details of how the applied rules are initialized and what possibilities exist for updating them at runtime.
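The idea of rule-driven partitioning can be sketched as follows. The assumption, which is made here for illustration and is not the article's code, is that each rule names the event fields to group by. Each event is emitted once per active rule, tagged with a key built from that rule's fields, so replacing the rule list at runtime changes the partitioning without changing code. `Rule`, `grouping_key_names`, `extract_key`, and `dynamic_key` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

Event = Dict[str, object]


@dataclass(frozen=True)
class Rule:
    # Hypothetical rule shape: an id plus the names of the event fields
    # whose values together form the partitioning key.
    rule_id: int
    grouping_key_names: Tuple[str, ...]


def extract_key(event: Event, names: Iterable[str]) -> str:
    # Composite key such as "payerId=1;beneficiaryId=2"
    # (the string format is an illustrative assumption).
    return ";".join(f"{name}={event[name]}" for name in names)


def dynamic_key(event: Event, rules: List[Rule]) -> List[Tuple[str, int, Event]]:
    # One (key, rule_id, event) triple per active rule, so the same event
    # is routed under a different key for each rule.
    return [(extract_key(event, r.grouping_key_names), r.rule_id, event)
            for r in rules]


rules = [Rule(1, ("payerId",)), Rule(2, ("payerId", "beneficiaryId"))]
event: Event = {"payerId": 1, "beneficiaryId": 2, "amount": 30}
routed = dynamic_key(event, rules)
```

A downstream keyBy on the first element of each triple would then give every rule its own partitioning of the same input stream.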

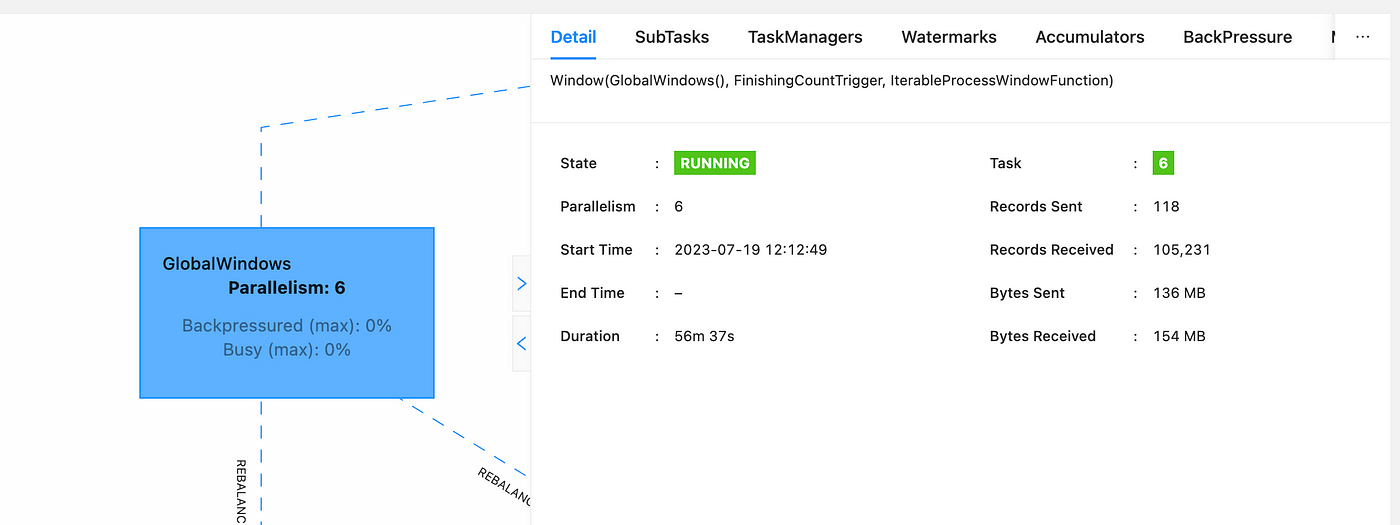

Union combines two or more data streams into a new stream containing all the elements from all the streams. It is described in more detail below (Figure 5). Note that none of the methods called so far actually process data; they only build the DAG that expresses the computation logic. However, a lower-level API offers more expressive power.

The RocksDB compaction filter queries the current timestamp, which it uses to check expiration, from Flink every time it has processed a certain number of state entries. The startNewChain operation begins a new chain, starting with this operator. Windows group all the stream events according to some characteristic (e.g., the data that arrived within the last 5 seconds). Table 3 is an example of a streaming WordCount.
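Union and windowing can be combined into a small streaming WordCount model. This is a plain-Python sketch, not the Flink API, and it assumes 5-second tumbling windows keyed by window start time; the names `union` and `windowed_word_count` are hypothetical. Flink's union does not order elements. The sort below only makes the merged stream easy to read.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, line of text)


def union(*streams: Iterable[Event]) -> List[Event]:
    # Union: a single stream containing every element of every input.
    return sorted((e for s in streams for e in s), key=lambda e: e[0])


def windowed_word_count(events: Iterable[Event], size: float) -> Dict[float, Counter]:
    # Tumbling windows: each event falls into exactly one window
    # [start, start + size), where start = floor(ts / size) * size.
    windows: Dict[float, Counter] = defaultdict(Counter)
    for ts, line in events:
        start = (ts // size) * size
        windows[start].update(line.split())
    return dict(windows)


a = [(0.5, "to be"), (6.0, "or not")]
b = [(1.2, "to be")]
counts = windowed_word_count(union(a, b), size=5.0)
```

Here the two "to be" lines from different input streams land in the same window `[0, 5)`, while "or not" falls into `[5, 10)`.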
