Group by pyspark

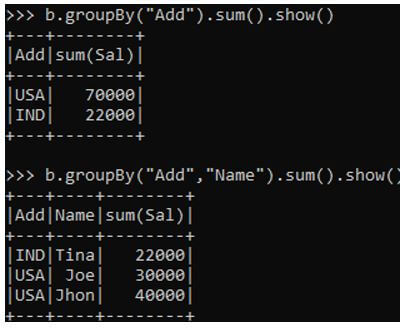

In PySpark, groupBy collects identical values into groups on a DataFrame so that aggregate functions can be applied to the grouped data. We can also group by and aggregate on multiple columns at a time.

Similarly, we can run groupBy and aggregate on two or more DataFrame columns, for example grouping by department and state and summing the salary and bonus columns. The same pattern works for the other aggregate functions.

Using the agg function, we can calculate many aggregations in a single statement with SQL functions such as sum, avg, min, max, and mean. To use these functions, we should first import them from the pyspark package. For example, we can group on the department column and calculate the sum and avg of salary and the sum and max of bonus for each department.

In this tutorial, you have learned how to use groupBy on a PySpark DataFrame, how to run it on multiple columns, and finally how to filter data on the aggregated columns.

In the realm of big data processing, PySpark has emerged as a powerful tool, allowing data scientists and engineers to perform complex data manipulations and analyses efficiently. For grouping and aggregating data, PySpark offers a versatile, high-performance solution in its groupBy operation. In this article, we will dive deep into PySpark groupBy, exploring its capabilities, use cases, and best practices.

PySpark is an open-source Python library that provides an interface for Apache Spark, a powerful distributed data processing framework. Spark allows users to process large-scale datasets in parallel across a cluster of computers, making it a popular choice for big data analytics. The groupBy operation in PySpark allows you to group data based on one or more columns in a DataFrame. Once grouped, you can perform various aggregation operations on the grouped data, such as summing, counting, averaging, or applying custom aggregation functions.

As a quick reminder, PySpark groupBy is a powerful operation that allows you to perform aggregations on your data. It groups the rows of a DataFrame based on one or more columns and then applies an aggregation function to each group. Common aggregation functions include sum, count, mean, min, and max. When several aggregates are needed for the same groups, we can achieve this by chaining multiple aggregation functions. In some cases, you may also need to apply a custom aggregation function.

PySpark groupBy with agg is used to calculate more than one aggregate at a time on a grouped DataFrame. To perform the agg, you first call groupBy on the DataFrame, which groups the records based on single or multiple column values, and then call agg to get the aggregates for each group. In this article, I will explain how to use the agg function on a grouped DataFrame with examples.

The groupBy function collects identical data into groups, and agg then performs count, sum, avg, min, max, and similar aggregations on the grouped data. Calling groupBy on a DataFrame returns a GroupedData object, which has an agg method for aggregating the grouped DataFrame. After performing the aggregates, agg returns a PySpark DataFrame. Aggregate functions like sum, avg, min, and max need to be imported before use. For example, grouping on the department column and using agg with count gives the number of rows for each group. Groupby aggregate on multiple columns can be performed by passing two or more columns to groupBy and then using agg.

Use groupBy followed by count to return the number of rows for each group. The sum, avg, min, and max functions calculate the sum, average, minimum, and maximum values of a numeric column within each group, respectively.

A groupBy on a department column (DEPT) can likewise be combined with sum, min, and max. For example, to calculate the total salary expenditure for each department, group by the department column and sum the salary column.
