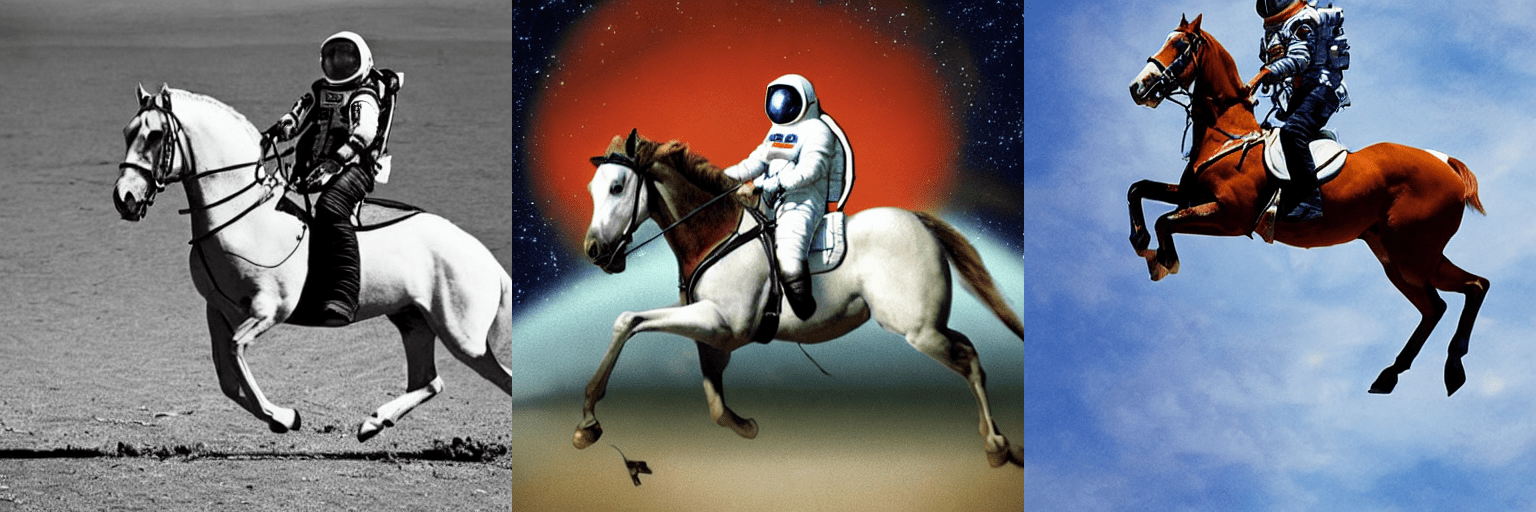

Hugging Face Stable Diffusion

Stable Video Diffusion (SVD) is a powerful image-to-video generation model that can generate short, high-resolution videos conditioned on an input image. This guide shows you how to use SVD to generate short videos from images. Before you begin, make sure you have the required libraries installed. To reduce the memory requirement, there are multiple options that trade inference speed for lower memory use.
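As a rough sketch of the memory trade-offs described above, the following uses the Diffusers `StableVideoDiffusionPipeline`. The model ID, the resize to 1024x576, the frame rate, and the helper names `svd_memory_options` and `generate_video` are assumptions for illustration and do not come from the text above. It also assumes `diffusers`, `transformers`, `accelerate`, and a CUDA-capable GPU are available.

```python
def svd_memory_options(low_memory: bool) -> dict:
    """Return illustrative settings; the low-memory set is slower but uses less VRAM."""
    if not low_memory:
        return {"cpu_offload": False, "forward_chunking": False, "decode_chunk_size": 8}
    return {"cpu_offload": True, "forward_chunking": True, "decode_chunk_size": 2}


def generate_video(image_path: str, output_path: str = "generated.mp4",
                   low_memory: bool = True) -> str:
    # Heavy imports live inside the function so this module can be imported
    # on a machine without torch or a GPU.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    opts = svd_memory_options(low_memory)
    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",  # assumed checkpoint
        torch_dtype=torch.float16,
        variant="fp16",
    )
    if opts["cpu_offload"]:
        # Moves sub-models to the GPU only while they are needed.
        pipe.enable_model_cpu_offload()
    else:
        pipe.to("cuda")
    if opts["forward_chunking"]:
        # Runs the feed-forward layers in chunks instead of one large batch.
        pipe.unet.enable_forward_chunking()

    image = load_image(image_path).resize((1024, 576))  # assumed input size
    # A smaller decode_chunk_size decodes fewer frames at once in the VAE.
    frames = pipe(image, decode_chunk_size=opts["decode_chunk_size"]).frames[0]
    export_to_video(frames, output_path, fps=7)
    return output_path
```

Calling `generate_video("input.png")` would write `generated.mp4` using the low-memory settings; passing `low_memory=False` favors speed instead.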

Welcome to this Hugging Face Inference Endpoints guide on how to deploy Stable Diffusion to generate images for a given input prompt. This guide will not explain how the model works. Inference Endpoints supports all the Transformers and Sentence-Transformers tasks, as well as diffusers tasks and any arbitrary ML framework, through easy customization by adding a custom inference handler. This custom inference handler can be used to implement simple inference pipelines for ML frameworks like Keras, TensorFlow, and scikit-learn, or to add custom business logic to your existing transformers pipeline. The first step is to deploy our model as an Inference Endpoint. To do so, we add the Hugging Face repository ID of the Stable Diffusion model we want to deploy. Note: If the repository does not show up in the search, it might be gated.
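A custom inference handler is typically a `handler.py` file in the model repository that defines an `EndpointHandler` class with `__init__` and `__call__` methods. The sketch below shows what such a handler might look like for Stable Diffusion. The `encode_image` helper and the `{"image": ...}` response shape are assumptions chosen for illustration, and the handler assumes a GPU-backed endpoint.

```python
import base64
from io import BytesIO
from typing import Any, Dict


def encode_image(image) -> str:
    """Serialize a PIL-style image to a base64 PNG string for a JSON response."""
    buffer = BytesIO()
    image.save(buffer, format="PNG")
    return base64.b64encode(buffer.getvalue()).decode("utf-8")


class EndpointHandler:
    def __init__(self, path: str = ""):
        # `path` is the local directory holding the repository files.
        # Heavy imports are deferred until the endpoint actually starts.
        import torch
        from diffusers import StableDiffusionPipeline

        self.pipe = StableDiffusionPipeline.from_pretrained(path, torch_dtype=torch.float16)
        self.pipe = self.pipe.to("cuda")

    def __call__(self, data: Dict[str, Any]) -> Dict[str, str]:
        # The request body is expected to look like {"inputs": "<prompt>"}.
        prompt = data.pop("inputs", data)
        image = self.pipe(prompt).images[0]
        # Response shape is an assumption; clients decode the base64 string.
        return {"image": encode_image(image)}
```

A client would send `{"inputs": "a photo of an astronaut"}` and decode the returned base64 string back into a PNG.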

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub repository, paper.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive, or content that propagates historical or current stereotypes. The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities. Using the model to generate content that is cruel to individuals is a misuse of this model.

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and western cultures are often set as the default.

For safety checking, the NSFW concepts are passed into the model together with the generated image and compared against a hand-engineered weight for each NSFW concept.
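To make the comparison against per-concept weights concrete, here is a minimal sketch in plain Python. It is not the actual checker: the use of cosine similarity, the function names, and the toy embeddings and thresholds are all assumptions for illustration, and vectors are assumed to be non-zero.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def flagged_concepts(
    image_embedding: Sequence[float],
    concept_embeddings: Dict[str, Sequence[float]],
    thresholds: Dict[str, float],
) -> List[str]:
    """Return the concepts whose similarity to the image exceeds their own weight."""
    flagged = []
    for name, concept in concept_embeddings.items():
        score = cosine_similarity(image_embedding, concept)
        # Each concept carries its own hand-tuned threshold (hypothetical values here).
        if score > thresholds[name]:
            flagged.append(name)
    return flagged
```

An image would be treated as unsafe when `flagged_concepts` returns a non-empty list. Using one threshold per concept lets sensitive concepts be flagged at lower similarity than others.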

This model card focuses on the model associated with the Stable Diffusion v2 model. This stable-diffusion-2 model is resumed from the stable-diffusion base checkpoint (base-ema), with training then resumed for further steps. Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub repository.

The Diffusers library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions. Its documentation helps you learn the fundamental skills you need to start generating outputs, build your own diffusion system, and train a diffusion model. Practical guides help you load pipelines, models, and schedulers. You'll also learn how to use pipelines for specific tasks, control how outputs are generated, optimize for inference speed, and apply different training techniques. The conceptual material explains why the library was designed the way it was and covers the ethical guidelines and safety implementations for using the library.

We present SDXL, a latent diffusion model for text-to-image synthesis. Compared to previous versions of Stable Diffusion, SDXL leverages a three times larger UNet backbone. The increase in model parameters is mainly due to more attention blocks and a larger cross-attention context, as SDXL uses a second text encoder. We design multiple novel conditioning schemes and train SDXL on multiple aspect ratios. We also introduce a refinement model which is used to improve the visual fidelity of samples generated by SDXL using a post-hoc image-to-image technique. We demonstrate that SDXL shows drastically improved performance compared to the previous versions of Stable Diffusion and achieves results competitive with those of black-box state-of-the-art image generators.
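The post-hoc refinement step can be sketched with the Diffusers SDXL pipelines: a base pipeline produces an image, and an image-to-image refiner pipeline improves it. The checkpoint IDs and the helper names `load_sdxl_pipelines` and `generate_with_refiner` are assumptions for illustration, and a CUDA-capable GPU is assumed.

```python
def load_sdxl_pipelines():
    """Load an SDXL base pipeline and an image-to-image refiner (assumed checkpoints)."""
    # Imports are deferred so the module can be imported without torch installed.
    import torch
    from diffusers import StableDiffusionXLImg2ImgPipeline, StableDiffusionXLPipeline

    base = StableDiffusionXLPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    ).to("cuda")
    refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-refiner-1.0",
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    ).to("cuda")
    return base, refiner


def generate_with_refiner(prompt: str, base, refiner):
    """Generate with the base model, then refine the result image-to-image."""
    draft = base(prompt=prompt).images[0]
    # The refiner takes the base output as its starting image.
    return refiner(prompt=prompt, image=draft).images[0]
```

Typical usage would be `base, refiner = load_sdxl_pipelines()` followed by `generate_with_refiner("an astronaut riding a horse", base, refiner)`. Passing the pipelines in as arguments keeps the two-stage logic separate from model loading.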

Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

The default run we did above used full float32 precision and ran the default number of inference steps.
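Two common ways to speed up such a default run are loading the weights in half precision and lowering the number of inference steps. The sketch below illustrates both with `StableDiffusionPipeline`. The `RunSettings` class, the step count of 25, and the default model ID are hypothetical choices for illustration, and a CUDA-capable GPU is assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunSettings:
    half_precision: bool = True      # float16 instead of float32
    num_inference_steps: int = 25    # arbitrary illustrative value

    @property
    def bytes_per_weight(self) -> int:
        # float16 stores each weight in 2 bytes, float32 in 4.
        return 2 if self.half_precision else 4


def generate(prompt: str, settings: RunSettings = RunSettings(),
             model_id: str = "CompVis/stable-diffusion-v1-4"):
    # Imports are deferred so the module can be imported without torch installed.
    import torch
    from diffusers import StableDiffusionPipeline

    dtype = torch.float16 if settings.half_precision else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=dtype).to("cuda")
    # Fewer denoising steps run faster, usually at some cost in image quality.
    return pipe(prompt, num_inference_steps=settings.num_inference_steps).images[0]
```

Half precision roughly halves the memory needed for the weights, and the step count scales the number of denoising passes, so both settings are worth tuning against output quality.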

For more details about installing PyTorch and Flax, please refer to their official documentation. You can also dig into the models and schedulers toolbox to build your own diffusion system.

Before integrating the endpoint and model into our applications, we can demo and test the model directly in the UI.

By far most of the memory is taken up by the cross-attention layers. Choosing a more efficient scheduler could help us decrease the number of steps. Overall, we strongly recommend just trying the models out and reading up on advice online.

The watermark estimate is from the LAION-5B metadata, and the aesthetics score is estimated using an improved aesthetics estimator. The models were evaluated with different classifier-free guidance scales.

Training data: The model developers used the following dataset for training the model: LAION-2B (en) and subsets thereof.

Training procedure: Stable Diffusion is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training, images are encoded through an encoder, which turns images into latent representations.
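To give a sense of how much smaller the latent representation is than the image, the sketch below computes the latent shape for a given image size. The downsampling factor of 8 and the 4 latent channels are taken from the Stable Diffusion model card rather than from the text above, so treat them as assumptions. The function names are hypothetical.

```python
from typing import Tuple


def latent_shape(height: int, width: int, downsample_factor: int = 8,
                 latent_channels: int = 4) -> Tuple[int, int, int]:
    """Shape (channels, height, width) of the latent for an RGB image."""
    if height % downsample_factor or width % downsample_factor:
        raise ValueError("height and width must be multiples of the downsampling factor")
    return (latent_channels, height // downsample_factor, width // downsample_factor)


def compression_ratio(height: int, width: int) -> float:
    """How many times fewer values the latent holds than the RGB image."""
    channels, latent_h, latent_w = latent_shape(height, width)
    return (3 * height * width) / (channels * latent_h * latent_w)
```

Under these assumptions a 512x512 RGB image maps to a 4x64x64 latent, which holds 48 times fewer values. That reduction is why running the diffusion model in latent space is much cheaper than running it on pixels.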
