Huggingface tokenizers

Big shoutout to rlrs for the fast replace normalizers PR, which boosts the performance of the tokenizers. Full Changelog: v0

A tokenizer is in charge of preparing the inputs for a model. The library contains tokenizers for all the models, and they inherit from PreTrainedTokenizerBase. The `stride` argument defines the number of overlapping tokens between a truncated sequence and the overflowing tokens returned with `return_overflowing_tokens=True`. If `is_split_into_words` is set to True, the tokenizer assumes the input is already split into words (for instance, by splitting it on whitespace), which it will tokenize. This is useful for NER or token classification. Finally, `pad_to_multiple_of` pads the sequence to a multiple of the provided value and requires padding to be activated.
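
The effect of `stride` can be illustrated without the library. The helper below, `overlapping_windows`, is hypothetical: a plain-Python sketch that cuts a long sequence of token ids into windows of `max_length` tokens where consecutive windows share `stride` tokens. It ignores special tokens, which a real tokenizer adds around each window, so treat it as an illustration of the idea rather than the transformers implementation.

```python
def overlapping_windows(ids, max_length, stride):
    """Split `ids` into windows of `max_length` that share `stride` tokens."""
    if not 0 <= stride < max_length:
        raise ValueError("stride must satisfy 0 <= stride < max_length")
    step = max_length - stride  # how far each new window advances
    windows, start = [], 0
    while True:
        windows.append(ids[start:start + max_length])
        if start + max_length >= len(ids):
            break  # this window already reaches the end of the sequence
        start += step
    return windows


token_ids = list(range(10))  # stand-in for real token ids
print(overlapping_windows(token_ids, max_length=4, stride=2))
# [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

Each window repeats the last two ids of the previous one, so a token near a window boundary is still seen with some context on both sides.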

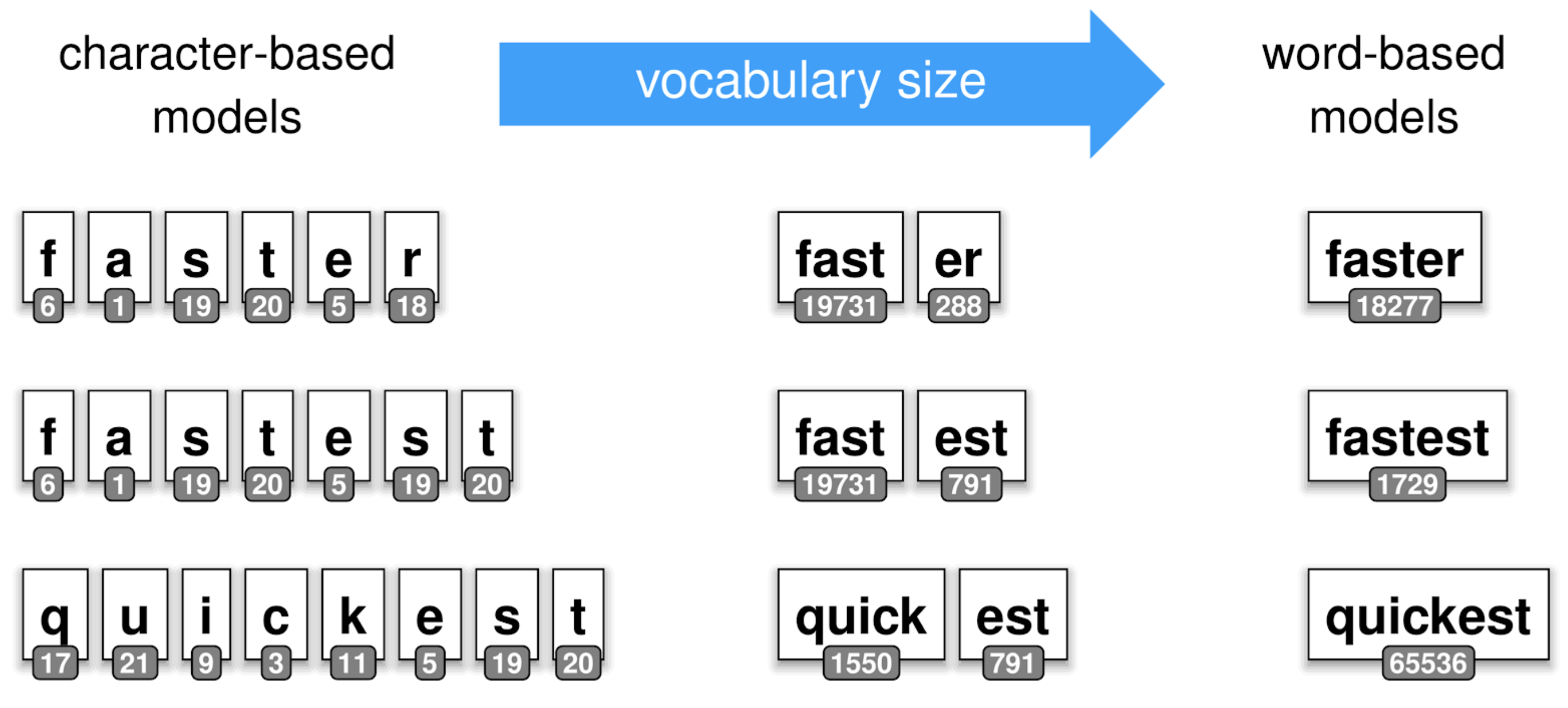

As we saw in the preprocessing tutorial, tokenizing a text is splitting it into words or subwords, which are then converted to ids through a look-up table. Converting words or subwords to ids is straightforward, so in this summary we will focus on splitting a text into words or subwords, i.e. tokenizing a text. Note that on each model page, you can look at the documentation of the associated tokenizer to know which tokenizer type was used by the pretrained model. For instance, if we look at BertTokenizer, we can see that the model uses WordPiece.

Splitting a text into smaller chunks is a task that is harder than it looks, and there are multiple ways of doing so. For instance, let's look at the sentence "Don't you love 🤗 Transformers? We sure do." A simple way of tokenizing this text is to split it by spaces. This is a sensible first step, but if we look at the tokens "Transformers?" and "do.", we notice that the punctuation is attached to the words "Transformers" and "do", which is suboptimal. We should take the punctuation into account so that a model does not have to learn a different representation of a word for every possible punctuation symbol that could follow it, which would explode the number of representations the model has to learn. Taking punctuation into account separates "?" and "." into tokens of their own. However, this tokenization deals with the word "Don't" in a disadvantageous way: it splits it into "Don", "'", and "t", although "Don't" stands for "do not" and would be better tokenized as "Do" and "n't". This is where things start getting complicated, and part of the reason each model has its own tokenizer type.
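
The three strategies discussed for the sentence "Don't you love 🤗 Transformers? We sure do." can be compared with a short standard-library sketch. The regular expressions are assumptions chosen for illustration, not the rules of any particular model's tokenizer, and the contraction rule only handles the "n't" case.

```python
import re

SENTENCE = "Don't you love 🤗 Transformers? We sure do."


def whitespace_tokenize(text):
    # Split on runs of whitespace only; punctuation stays glued to words
    return text.split()


def punctuation_tokenize(text):
    # Runs of word characters, or any single non-space symbol (punctuation, emoji)
    return re.findall(r"\w+|[^\w\s]", text)


def contraction_tokenize(text):
    # Illustrative rule: split a trailing "n't" off the word before it
    # ("Don't" -> "Do", "n't"), then fall back to the punctuation rule
    return re.findall(r"\w+(?=n't)|n't|\w+|[^\w\s]", text)


print(whitespace_tokenize(SENTENCE))
# ["Don't", 'you', 'love', '🤗', 'Transformers?', 'We', 'sure', 'do.']
print(punctuation_tokenize(SENTENCE))
# ['Don', "'", 't', 'you', 'love', '🤗', 'Transformers', '?', 'We', 'sure', 'do', '.']
print(contraction_tokenize(SENTENCE))
# ['Do', "n't", 'you', 'love', '🤗', 'Transformers', '?', 'We', 'sure', 'do', '.']
```

Each added rule fixes one problem of the previous strategy, which is why rule-based tokenizers tend to accumulate many language-specific special cases.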

As mentioned earlier, the vocabulary size, i.e. the base vocabulary size plus the number of merges, is a hyperparameter to choose. All Transformers models in the library that use SentencePiece use it in combination with Unigram.
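
With a Unigram model, a word is segmented into the sequence of vocabulary pieces with the highest overall probability. The sketch below finds that segmentation with dynamic programming (the Viterbi algorithm). The vocabulary and probabilities are invented for illustration, and real SentencePiece models additionally handle whitespace markers and unknown characters, which this sketch does not.

```python
import math

# Hypothetical piece probabilities, made up for this example
PIECE_PROBS = {"h": 0.1, "u": 0.1, "g": 0.1, "s": 0.1,
               "hu": 0.05, "ug": 0.15, "gs": 0.05, "hug": 0.3}
LOG_PROBS = {piece: math.log(p) for piece, p in PIECE_PROBS.items()}


def viterbi_segment(word, log_probs):
    """Return the most probable segmentation of `word`, or None if none exists."""
    n = len(word)
    # best[i] holds (log-probability, start index of last piece) for word[:i]
    best = [(-math.inf, -1) for _ in range(n + 1)]
    best[0] = (0.0, -1)
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in log_probs and best[start][0] > -math.inf:
                score = best[start][0] + log_probs[piece]
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        return None  # some characters are not covered by any piece
    # Walk the back-pointers from the end of the word to recover the pieces
    pieces, end = [], n
    while end > 0:
        start = best[end][1]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1]


print(viterbi_segment("hugs", LOG_PROBS))  # ['hug', 's']
```

Here "hug" + "s" (probability 0.3 × 0.1 = 0.03) beats every alternative, such as "h" + "ug" + "s" (0.1 × 0.15 × 0.1 = 0.0015).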

Released: Feb 12

Provides an implementation of today's most used tokenizers, with a focus on performance and versatility. The package provides bindings over the Rust implementation. If you are interested in the high-level design, you can go check it there.

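When calling `Tokenizer.encode` or `Tokenizer.encode_batch`, the input text(s) go through a pipeline of normalization, pre-tokenization, the model, and post-processing. For the examples that require a Tokenizer, we will use the tokenizer we trained in the quicktour, which you can load with `Tokenizer.from_file`. Normalization is, in a nutshell, a set of operations applied to a raw string to make it less random or "cleaner". Common operations include stripping whitespace, removing accented characters or lowercasing all text. As an example, a normalizer can apply NFD Unicode normalization and then remove accents. When building a Tokenizer, you can customize its normalizer by just changing the corresponding attribute, `tokenizer.normalizer`. Of course, if you change the way a tokenizer applies normalization, you should probably retrain it from scratch afterward.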
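
A normalizer that applies NFD and then removes accents can be approximated with Python's standard `unicodedata` module. In the tokenizers library, this combination is usually built as `normalizers.Sequence([NFD(), StripAccents()])` and assigned to `tokenizer.normalizer`; the function below is only a stand-in that shows what those two steps do to a string, and the optional lowercasing flag is an addition for illustration.

```python
import unicodedata


def strip_accents(text, lowercase=False):
    """Approximate an NFD + accent-stripping normalizer (optionally lowercasing)."""
    # NFD decomposes "é" into "e" followed by a combining acute accent
    decomposed = unicodedata.normalize("NFD", text)
    # Combining marks have Unicode category "Mn"; dropping them removes the accents
    stripped = "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
    return stripped.lower() if lowercase else stripped


print(strip_accents("Héllò hôw are ü?"))  # Hello how are u?
print(strip_accents("Héllò hôw are ü?", lowercase=True))  # hello how are u?
```

The decomposition step matters: without NFD, a precomposed "é" is a single character with no separate accent to remove.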

Models can only process numbers, so tokenizers need to convert our text inputs to numerical data. Space and punctuation tokenization and rule-based tokenization are both examples of word tokenization, which is loosely defined as splitting sentences into words. How much information a single character carries differs according to the language; in Chinese, for example, each character carries more information than a character in a Latin language.

Byte-Pair Encoding (BPE) counts the frequency of each possible symbol pair and picks the symbol pair that occurs most frequently, merging it into a new symbol. Assuming that the training stopped at this point, the learned merge rules would then be applied to new words, as long as those new words do not include symbols that were not in the base vocabulary.

The tokenizer classes also expose helpers around these steps. `batch_decode` converts a list of lists of token ids into a list of strings by calling `decode`. For fast tokenizers provided by the HuggingFace tokenizers library, `set_truncation_and_padding` defines the truncation and padding strategies and restores the tokenizer settings afterwards, and the `word_ids` method of the encoded output returns a list mapping the tokens to their actual word in the initial sentence. Some arguments of `apply_chat_template` are passed to the chat template, so they must be supported in the template to have any effect.
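
The BPE training loop (count adjacent symbol pairs, merge the most frequent one, repeat) and the application of the learned merges to new words can be sketched in plain Python. The word frequencies below are a toy corpus chosen for illustration, ties between equally frequent pairs are broken by first occurrence, and this sketch makes no attempt at the optimizations of the Rust-based tokenizers library.

```python
from collections import Counter


def count_pairs(splits, freqs):
    # Frequency of each adjacent symbol pair, weighted by word frequency
    pairs = Counter()
    for word, symbols in splits.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freqs[word]
    return pairs


def merge_pair(symbols, pair):
    # Replace every occurrence of `pair` in `symbols` with the merged symbol
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def train_bpe(freqs, num_merges):
    splits = {w: list(w) for w in freqs}
    vocab = sorted({c for w in freqs for c in w})  # base vocabulary: characters
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(splits, freqs)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair
        merges.append(best)
        vocab.append(best[0] + best[1])
        splits = {w: merge_pair(s, best) for w, s in splits.items()}
    return vocab, merges


def tokenize(word, vocab, merges, unk="<unk>"):
    # Apply the learned merges in order; unknown base symbols become `unk`
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return [s if s in vocab else unk for s in symbols]


freqs = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
vocab, merges = train_bpe(freqs, num_merges=3)
print(merges)                           # [('u', 'g'), ('u', 'n'), ('h', 'ug')]
print(tokenize("bug", vocab, merges))   # ['b', 'ug']
print(tokenize("mug", vocab, merges))   # ['<unk>', 'ug']
```

"bug" is covered by learned symbols, while "mug" contains "m", which is not in the base vocabulary, so that symbol falls back to an unknown token.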

GPT-2 has a vocabulary size of 50,257, which corresponds to the 256 bytes base tokens, a special end-of-text token and the symbols learned with 50,000 merges. Subword tokenization is especially useful in agglutinative languages such as Turkish, where you can form almost arbitrarily long complex words by stringing together subwords. In `transformers.PreTrainedTokenizer`, added tokens and tokens from the vocabulary of the tokenization algorithm are not treated in the same way.
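
The GPT-2 vocabulary size is a simple sum, and the byte-level base vocabulary explains why no input text falls outside it. A minimal check in Python (the sample string is arbitrary):

```python
BASE_BYTE_TOKENS = 256  # one base token per possible byte value
SPECIAL_TOKENS = 1      # GPT-2's end-of-text token, <|endoftext|>
NUM_MERGES = 50_000     # one new symbol per learned merge

vocab_size = BASE_BYTE_TOKENS + SPECIAL_TOKENS + NUM_MERGES
print(vocab_size)  # 50257

# Any string, in any script, encodes to UTF-8 bytes in range(256),
# so the byte-level base vocabulary can represent it without an unknown token.
sample = "Türkçe, 中文, 🤗"
byte_values = list(sample.encode("utf-8"))
print(all(0 <= b < BASE_BYTE_TOKENS for b in byte_values))  # True
```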
