Orderby pyspark

Apache Spark is a widely used open-source distributed computing system that provides a fast and efficient platform for large-scale data processing. In PySpark, DataFrames are the primary abstraction for working with structured data. A DataFrame is a distributed collection of data organized into named columns, similar to a table in a relational database.

In this article, we will see how to sort a DataFrame by specified columns in PySpark. We can use either orderBy or sort to do this. The orderBy function sorts a DataFrame by the values of the specified columns. Return type: Returns a new DataFrame sorted by the specified columns. Parameters: cols: list of Column or column names to sort by; ascending: Boolean value, or list of Boolean values, that selects ascending or descending order (ascending by default). Dataframe Creation: Create a new SparkSession object named spark, then create a DataFrame with the custom data.

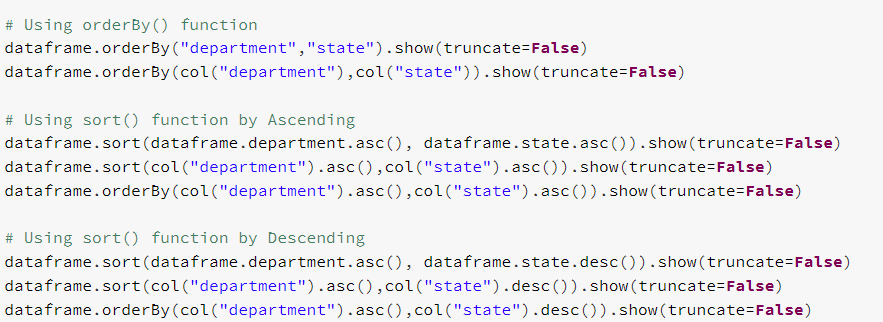

In PySpark, the DataFrame class provides a sort function that sorts on one or more columns, in ascending order by default. Both sort and orderBy can be used to sort a DataFrame in ascending or descending order based on a single column or on multiple columns. RDD transformations are lazy operations: none of them is executed until the user calls an action. This recipe explains what the orderBy and sort functions are and how they are used in PySpark.

Importing packages: SparkSession, Row, col, asc and desc are imported into the environment so that orderBy and sort can be used. The Spark session is then defined. Further, the DataFrame "dataframe" is defined using the sample data and sample columns.

Using the sort function, the first statement takes the DataFrame column names as strings and the next takes the columns as Column type; in both cases the output table is sorted first by the department column and then by the state column. Using the orderBy function, the first statement again takes the column names as strings and the next takes them as Column type, and the output table is sorted first by the department column and then by the state column.

You can use either the sort or the orderBy function of a PySpark DataFrame to sort it in ascending or descending order based on a single column or on multiple columns. Both methods take one or more columns as arguments and return a new DataFrame after sorting. In this article, I will explain these different ways using PySpark examples. The PySpark DataFrame class provides the sort function to sort on one or more columns. By default, it sorts in ascending order. To specify different sort orders for different columns, pass the ascending parameter as a list. A column to sort by can be given either as a column name string or as a Column object, and both forms return the same output.

Returns a new DataFrame sorted by the specified column(s). The ascending parameter selects ascending vs. descending order. Specify a list for multiple sort orders. If a list is specified, the length of the list must equal the length of cols.

Further, a DataFrame can be sorted using the asc method of the Column class, for example to order the data by ascending order of Salary.
