Pandas to Spark

As a data scientist or software engineer, you may often find yourself working with large datasets that require distributed computing, which is where moving from pandas to Spark comes in. Apache Spark is a powerful distributed computing framework that can handle big data processing tasks efficiently. We will assume that you have a basic understanding of Python, pandas, and Spark. A pandas DataFrame is a two-dimensional, table-like data structure used to store and manipulate data in Python.

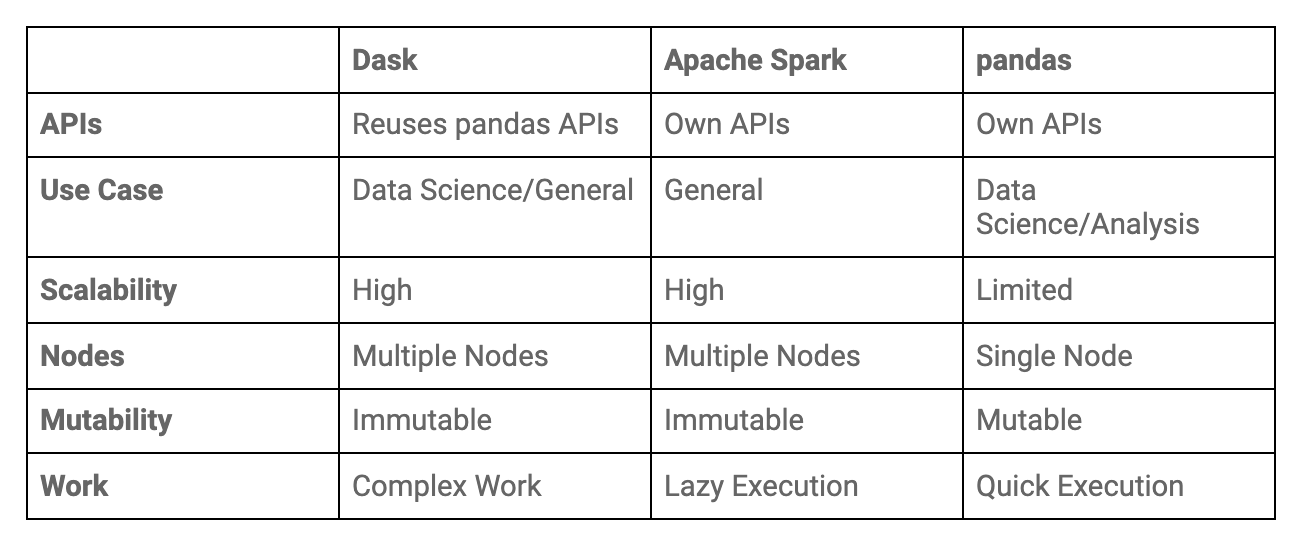

To use pandas, first import it with `import pandas as pd`. On large datasets, PySpark operations can run faster than pandas because Spark distributes the work and executes it in parallel across multiple cores and machines. In other words, pandas runs operations on a single node, whereas PySpark can run on multiple machines. For small datasets that fit comfortably in memory, this advantage may not hold, since distributed execution adds overhead. If you want all data types to be String, use spark.
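The sentence about String types is cut off in the source, so the exact call it had in mind is unknown. One possible approach, sketched here as an assumption, is to cast every column to strings in pandas before handing the frame to Spark. The helper name `all_columns_as_str` and the sample columns are made up for illustration.

```python
import pandas as pd


def all_columns_as_str(pdf: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of pdf with every column cast to strings."""
    return pdf.astype(str)


# Illustrative data; the column names are invented for this sketch.
pdf = pd.DataFrame({"id": [1, 2], "score": [0.5, 1.5]})
str_pdf = all_columns_as_str(pdf)

# With an active SparkSession named `spark` (not created here), the cast
# frame could then be converted, and Spark should infer string columns:
#   sdf = spark.createDataFrame(str_pdf)
```

Casting in pandas keeps the Spark side simple, but it also discards numeric types, so it is probably only worth doing when downstream code really expects strings.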


Pandas and PySpark are two popular data processing tools in Python. While pandas is well suited to small and medium-sized datasets on a single machine, PySpark is designed for distributed processing of large datasets across multiple machines. Converting a pandas DataFrame to a PySpark DataFrame can be necessary when you need to scale up your data processing to handle larger datasets.

The conversion goes through the SparkSession object, referred to here as `spark`. In the conversion call, `data` is the list of values from which the DataFrame is created, and `schema` is either the structure of the dataset or a list of column names.

A typical example first creates a pandas DataFrame, then creates a SparkSession object, converts the pandas DataFrame to a PySpark DataFrame using `spark`, and finally calls the `show` method to display the contents of the PySpark DataFrame on the console. Before running such code, make sure that the pandas and PySpark libraries are installed on your system.

Next, we write the PyArrow Table to disk in Parquet format using the pq.
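The steps described above (build a pandas DataFrame, create a SparkSession, convert, show) can be sketched roughly as follows. This is a minimal illustration, not the source's original listing: the sample data, the helper names `make_pandas_df` and `pandas_to_spark`, and the app name are assumptions. It uses `SparkSession.createDataFrame`, which accepts a pandas DataFrame and an optional `schema`.

```python
import pandas as pd


def make_pandas_df() -> pd.DataFrame:
    """Build a small pandas DataFrame; the values are illustrative."""
    return pd.DataFrame(
        {"name": ["Alice", "Bob", "Cara"], "age": [34, 45, 29]}
    )


def pandas_to_spark(spark, pdf: pd.DataFrame, schema=None):
    """Convert a pandas DataFrame to a PySpark DataFrame.

    `spark` is a SparkSession. `schema` may be None (types are inferred),
    a list of column names, or a StructType.
    """
    return spark.createDataFrame(pdf, schema=schema)


def main() -> None:
    # Imported here so the pandas helpers above work without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("pandas-to-spark").getOrCreate()
    sdf = pandas_to_spark(spark, make_pandas_df())
    sdf.show()  # prints the rows to the console
    spark.stop()
```

Calling `main()` requires PySpark and a Java runtime; the two helper functions only need pandas.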


Sometimes the data arrives as csv, xlsx, etc. For conversion, we pass the pandas DataFrame into the `createDataFrame` method. Both examples create a pandas DataFrame and then convert it using spark. The dataset used here is heart. We can also convert a PySpark DataFrame to a pandas DataFrame. For this, we will use DataFrame.
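The method name for the reverse direction is cut off in the source. A common choice in PySpark is `DataFrame.toPandas()`, so the sketch below assumes that is what was meant. The wrapper `spark_to_pandas` and its `max_rows` parameter are hypothetical additions for illustration.

```python
import pandas as pd


def spark_to_pandas(sdf, max_rows=None) -> pd.DataFrame:
    """Collect a PySpark DataFrame to the driver as a pandas DataFrame.

    toPandas() pulls every row into driver memory, so `max_rows`
    (a hypothetical safety knob for this sketch) can cap the rows first.
    """
    if max_rows is not None:
        sdf = sdf.limit(max_rows)
    return sdf.toPandas()
```

Because the whole result lands on a single machine, this direction is best reserved for data that has already been filtered or aggregated down to a manageable size.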

This tutorial introduces the basics of using pandas and Spark together, progressing to more complex integrations.

User-defined functions (UDFs) can be written using pandas data manipulation capabilities and executed within the Spark context for distributed processing. One example is a simple UDF that adds one to each element in a column and is applied over a Spark DataFrame originally created from a pandas DataFrame.

Converting between pandas and Spark DataFrames is a common integration task. In Spark 3. This shows how convenient it is to perform operations that are typical in pandas but on a distributed dataset. This new API bridges the gap between the scalability of Spark and the ease of data manipulation in pandas.

While integrating pandas and Spark can offer the best of both worlds, there are important performance considerations to keep in mind. Operations that transfer data between Spark and pandas, particularly for large datasets, can be costly.
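The add-one UDF mentioned above might look something like the following. This is a sketch under assumptions, not the tutorial's original code: the column name `x`, the app name, and the structure with a separate `main` function are illustrative. It relies on `pyspark.sql.functions.pandas_udf`, which also needs PyArrow installed.

```python
import pandas as pd


def add_one(values: pd.Series) -> pd.Series:
    """Add one to every element; plain pandas, so it can be tested locally."""
    return values + 1


def main() -> None:
    # Imported here so add_one stays usable without Spark installed.
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import pandas_udf

    spark = SparkSession.builder.appName("pandas-udf-sketch").getOrCreate()

    # Wrap the pandas function as a vectorized (pandas) UDF returning longs.
    add_one_udf = pandas_udf(add_one, returnType="long")

    # Start from a pandas DataFrame, convert it, then apply the UDF in Spark.
    pdf = pd.DataFrame({"x": [1, 2, 3]})
    sdf = spark.createDataFrame(pdf)
    sdf.withColumn("x_plus_one", add_one_udf("x")).show()
    spark.stop()
```

Keeping the logic in a plain pandas function makes it easy to check on a small Series before running it on a cluster.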



Last Updated : 22 Mar,

Enabling Arrow optimization can substantially speed up conversion between Spark and pandas DataFrames. StructType is represented as a pandas.
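As a rough illustration of enabling Arrow optimization, the sketch below sets the Spark SQL option `spark.sql.execution.arrow.pyspark.enabled`, which is the key used in Spark 3.x. The helper names `arrow_conf` and `apply_conf` are hypothetical, and `spark` is assumed to be an existing SparkSession.

```python
ARROW_CONF_KEY = "spark.sql.execution.arrow.pyspark.enabled"


def arrow_conf(enabled: bool = True) -> dict:
    """Build the config entry that toggles Arrow-based conversion."""
    return {ARROW_CONF_KEY: "true" if enabled else "false"}


def apply_conf(spark, conf: dict) -> None:
    """Apply each config entry to a SparkSession's runtime configuration."""
    for key, value in conf.items():
        spark.conf.set(key, value)


# Usage with an existing SparkSession named `spark` (not created here):
#   apply_conf(spark, arrow_conf(True))
#   pdf = sdf.toPandas()  # conversion can now use Arrow where supported
```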


The Arrow optimization setting is enabled by default except for High Concurrency clusters as well as user isolation clusters in workspaces that are Unity Catalog enabled. Arrow is beneficial to Python developers who work with pandas and NumPy data. In addition, not all Spark data types are supported, and an error can be raised if a column has an unsupported type.

Customarily, the pandas API on Spark is imported with `import pyspark.pandas as ps`.
