SageMaker PyTorch

With SageMaker multi-model endpoints (MMEs), you can host multiple models on a single serving container behind a single endpoint. The SageMaker platform automatically manages the loading and unloading of models and scales resources based on traffic patterns, reducing the operational burden of managing a large number of models.
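The text says SageMaker loads and unloads models automatically but does not describe the policy. As a rough mental model only, the Python sketch below keeps a fixed number of models in memory and, on a cache miss, evicts the least recently used one before loading the requested model. `ModelCache`, `load_fn`, and the count-based capacity are hypothetical (a real endpoint is bounded by instance memory, not by a model count), so treat this as an analogy, not as SageMaker's implementation.

```python
from collections import OrderedDict
from typing import Any, Callable, List


class ModelCache:
    """Hypothetical LRU cache illustrating on-demand model loading.

    Assumption: capacity counts models, not bytes, and load_fn stands in
    for fetching and deserializing model artifacts.
    """

    def __init__(self, capacity: int, load_fn: Callable[[str], Any]) -> None:
        self.capacity = capacity
        self.load_fn = load_fn
        self._models: OrderedDict = OrderedDict()
        self.loads = 0  # number of cold loads performed so far

    def get(self, model_name: str) -> Any:
        if model_name in self._models:
            # Warm hit: mark the model as most recently used.
            self._models.move_to_end(model_name)
            return self._models[model_name]
        # Cold miss: evict the least recently used model if the cache is full.
        if len(self._models) >= self.capacity:
            self._models.popitem(last=False)
        model = self.load_fn(model_name)
        self.loads += 1
        self._models[model_name] = model
        return model

    def loaded(self) -> List[str]:
        # Names of resident models, least recently used first.
        return list(self._models)


cache = ModelCache(capacity=2, load_fn=lambda name: f"<weights of {name}>")
for name in ["model-a", "model-b", "model-a", "model-c"]:
    cache.get(name)
print(cache.loaded(), cache.loads)
```

In this trace, `model-b` is evicted when `model-c` arrives because `model-a` was used more recently; the first request for any non-resident model pays the load cost, which is the cold-start effect an MME trades for density.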

Hugging Face Transformers also provides the Trainer class and pretrained model classes for PyTorch to help reduce the effort of configuring natural language processing (NLP) models. When input sequences are not padded to a fixed length, the dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class, so you don't need to change your Trainer code.
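To see why fixed-length padding keeps the input shape static, the dependency-free Python sketch below truncates or right-pads every token-ID sequence to exactly `max_length` and builds the matching attention mask. With a Transformers tokenizer you would normally get the same effect by passing `padding="max_length", truncation=True, max_length=...`; the function name, pad ID of 0, and token IDs here are illustrative assumptions, and unlike a real tokenizer this sketch does not re-append special tokens after truncation.

```python
from typing import List, Tuple


def pad_to_fixed_length(
    token_ids: List[List[int]], max_length: int, pad_id: int = 0
) -> Tuple[List[List[int]], List[List[int]]]:
    """Truncate or right-pad each sequence to exactly max_length tokens.

    Returns (input_ids, attention_mask); the mask is 1 for real tokens and
    0 for padding, so every batch has the same [batch, max_length] shape.
    """
    input_ids, attention_mask = [], []
    for seq in token_ids:
        seq = seq[:max_length]  # truncation: drop tokens past max_length
        n_pad = max_length - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)  # right padding
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return input_ids, attention_mask


batch = [[101, 7592, 102], [101, 2023, 2003, 1037, 2936, 6251, 102]]
ids, mask = pad_to_fixed_length(batch, max_length=5)
print(ids)   # [[101, 7592, 102, 0, 0], [101, 2023, 2003, 1037, 2936]]
print(mask)  # [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
```

Because every batch now has the same shape regardless of sentence length, a compiler that specializes the graph on input shapes only needs to compile it once.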

The deploy function creates an endpoint configuration and hosts the endpoint. The final step is to package all the model artifacts into a single archive.
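Packaging the artifacts can be sketched with Python's standard `tarfile` module. The sketch assumes a gzip-compressed tarball with the artifacts at the archive root (no enclosing folder); `package_model_dir` and the placeholder file names are hypothetical, so check the exact layout your serving container expects before relying on it.

```python
import os
import tarfile
import tempfile


def package_model_dir(model_dir: str, output_path: str) -> list:
    """Pack everything under model_dir into a .tar.gz at output_path.

    Entries are stored relative to model_dir (assumed layout: artifacts at
    the archive root). Returns the member names for a quick sanity check.
    """
    with tarfile.open(output_path, "w:gz") as tar:
        for name in sorted(os.listdir(model_dir)):
            # tar.add recurses into subdirectories such as code/.
            tar.add(os.path.join(model_dir, name), arcname=name)
    with tarfile.open(output_path, "r:gz") as tar:
        return tar.getnames()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model_dir = os.path.join(tmp, "model")
        os.makedirs(os.path.join(model_dir, "code"))
        # Placeholder files standing in for real artifacts (hypothetical names).
        for rel in ["model.pt", "config.yaml", os.path.join("code", "inference.py")]:
            with open(os.path.join(model_dir, rel), "w") as f:
                f.write("placeholder\n")
        print(package_model_dir(model_dir, os.path.join(tmp, "model.tar.gz")))
```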

A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more. For example, the following images show the effect of image-to-image conversion. The following images show the effect of synthesizing scenery from a semantic layout. We also introduce a use case from one of the most popular GAN application areas, synthetic data generation. We hope this gives you a tangible sense of how GANs are used in real-life scenarios. Of the following two pictures of handwritten digits, one was generated by a GAN model. Can you tell which one? The main topic of this article is using ML techniques to generate synthetic handwritten digits.
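As background for readers new to GANs, the standard adversarial objective from the original GAN formulation is reproduced below. Here $G$ is the generator that maps a noise vector $z \sim p_z$ to a synthetic image, $D(x)$ is the discriminator's estimated probability that $x$ is a real handwritten digit drawn from $p_{\text{data}}$, $D$ is trained to maximize $V$, and $G$ is trained to minimize it. The article does not state which loss variant its digit generator uses, so read this as the textbook formulation rather than the article's exact training objective.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$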

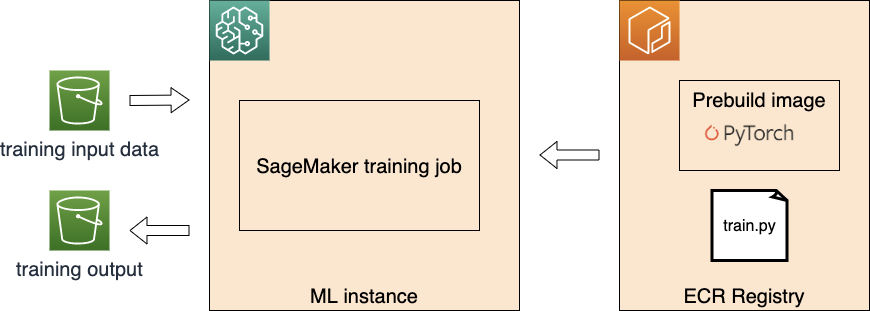

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker. As with the other frameworks that SageMaker supports, you can write your PyTorch script as you normally would and rely on Amazon SageMaker training to set up the distributed training cluster, transfer data, and even tune hyperparameters. On the inference side, Amazon SageMaker provides a managed, highly available online endpoint that can be automatically scaled up as needed.
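A "normal" PyTorch script usually only needs a thin argument-parsing layer to run under SageMaker training. The skeleton below follows the script-mode convention as we understand it: hyperparameters arrive as command-line flags, and SageMaker exposes the model output directory and each input channel through environment variables such as `SM_MODEL_DIR` and `SM_CHANNEL_TRAINING` (the latter assumes a channel named `training`). The flag names, defaults, and local fallback paths are hypothetical, and the actual training loop is left as a comment.

```python
import argparse
import os


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    # Hyperparameters set on the estimator are passed to the script as flags.
    parser.add_argument("--epochs", type=int, default=10)
    parser.add_argument("--lr", type=float, default=0.01)
    # Locations provided by SageMaker via environment variables, with local
    # fallbacks (assumed paths) so the script also runs on a laptop.
    parser.add_argument("--model-dir", default=os.environ.get("SM_MODEL_DIR", "./model"))
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAINING", "./data"))
    # parse_known_args ignores any extra flags this sketch does not define.
    args, _unknown = parser.parse_known_args(argv)
    return args


def main(argv=None):
    args = parse_args(argv)
    # Ordinary PyTorch code goes here: build the model, read data from
    # args.train, run the training loop for args.epochs, and save weights
    # into args.model_dir so SageMaker can upload them as model artifacts.
    return args


if __name__ == "__main__":
    main()
```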

The following images show an example. Businesses can benefit from increased content output, cost savings, improved personalization, and an enhanced customer experience.

Only sam. This model has been trained on a massive dataset known as SA-1B, which comprises over 11 million images and 1. The SD 2 inpaint model from Stability AI is used to modify or replace objects in an image.

You don't need to change the code lines where you define optimizers in your training script. See also Optimizer in the Hugging Face Transformers documentation. The computational graph gets compiled and executed when xm. In such cases, the printing of lazy tensors should be wrapped using xm.

A detailed illustration of this second user flow is as follows. Each of the models on its own could be served by an ml. The model name is just the name of the model. The file defines the configuration of the model server, such as the number of workers and the batch size. The configuration is set at a per-model level, and an example config file is shown in the following code.
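Assuming the model server is TorchServe, a per-model configuration is typically a small YAML file of flat `key: value` pairs. The Python sketch below renders such a file from a dict; the key names (`minWorkers`, `maxWorkers`, `batchSize`, `maxBatchDelay`, `responseTimeout`) are TorchServe model-config keys, but the values are illustrative assumptions, not the original example's settings, and `render_model_config` is a hypothetical helper that handles only flat scalar values.

```python
def render_model_config(config: dict) -> str:
    """Render a flat dict of scalars as simple 'key: value' YAML lines."""
    lines = [f"{key}: {value}" for key, value in config.items()]
    return "\n".join(lines) + "\n"


# Illustrative values only; tune workers and batching per model.
model_config = {
    "minWorkers": 1,        # workers started when the model is loaded
    "maxWorkers": 1,        # upper bound on workers for this model
    "batchSize": 1,         # requests aggregated into one inference call
    "maxBatchDelay": 200,   # ms to wait while filling a batch
    "responseTimeout": 300, # seconds before a request times out
}

print(render_model_config(model_config), end="")
```

Keeping these settings per model lets a large model such as an SD pipeline run with a single worker while a lighter model on the same endpoint uses more workers or larger batches.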

Import the optimization libraries. For Transformers v4.

Given an endpoint configuration with sufficient memory for your target models, the steady-state invocation latency after all models have been loaded will be similar to that of a single-model endpoint. Virginia Region.

The SD 2 inpaint model provides additional arguments, such as the original and mask images, allowing for quick modification and restoration of existing content.

He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible.

The example we shared illustrates how we can use resource sharing and simplified model management with SageMaker MMEs while still using TorchServe as our model serving stack. The following code is an example of the skeleton folder for the SD model. The handle method is the main entry point for requests; it accepts a request object and returns a response object.
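The handler code itself is not shown here. The sketch below assumes TorchServe's custom-handler convention, in which `handle(data, context)` receives a batch as a list of request rows (payload under `"data"` or `"body"`) and must return a list with exactly one response per request, in order. `EchoUpperHandler` and its upper-casing "model" are hypothetical stand-ins that keep the example dependency-free; a real handler would typically subclass `ts.torch_handler.base_handler.BaseHandler` and load actual model weights in `initialize`.

```python
from typing import Any, Dict, List


class EchoUpperHandler:
    """Hypothetical handler mirroring the initialize/handle pattern."""

    def __init__(self) -> None:
        self.initialized = False
        self.model = None

    def initialize(self, context: Any = None) -> None:
        # A real handler would load weights from the model directory here;
        # str.upper stands in for the model.
        self.model = str.upper
        self.initialized = True

    def preprocess(self, data: List[Dict[str, Any]]) -> List[str]:
        texts = []
        for row in data:
            payload = row.get("data") or row.get("body") or b""
            if isinstance(payload, (bytes, bytearray)):
                payload = payload.decode("utf-8")
            texts.append(payload)
        return texts

    def inference(self, texts: List[str]) -> List[str]:
        return [self.model(t) for t in texts]

    def postprocess(self, outputs: List[str]) -> List[Dict[str, str]]:
        # One response object per request in the batch, same order.
        return [{"result": o} for o in outputs]

    def handle(self, data: List[Dict[str, Any]], context: Any = None) -> List[Dict[str, str]]:
        # Main entry point: lazily initialize, then run the pipeline.
        if not self.initialized:
            self.initialize(context)
        return self.postprocess(self.inference(self.preprocess(data)))


handler = EchoUpperHandler()
print(handler.handle([{"body": b"cat"}, {"data": "dog"}]))
```

Splitting `handle` into preprocess, inference, and postprocess steps keeps request decoding separate from model execution, which makes it easier to swap in a different model (for example, an inpainting pipeline) without touching the request plumbing.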

It here if I am not mistaken.