Spark DataFrame

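Spark SQL is a Spark module for structured data processing. Unlike the basic RDD API, its interfaces give Spark more information about the structure of both the data and the computation being performed. Internally, Spark SQL uses this extra information to perform extra optimizations. The same execution engine computes the result no matter which API or language expresses the computation. This unification means that developers can easily switch back and forth between different APIs, based on which provides the most natural way to express a given transformation.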

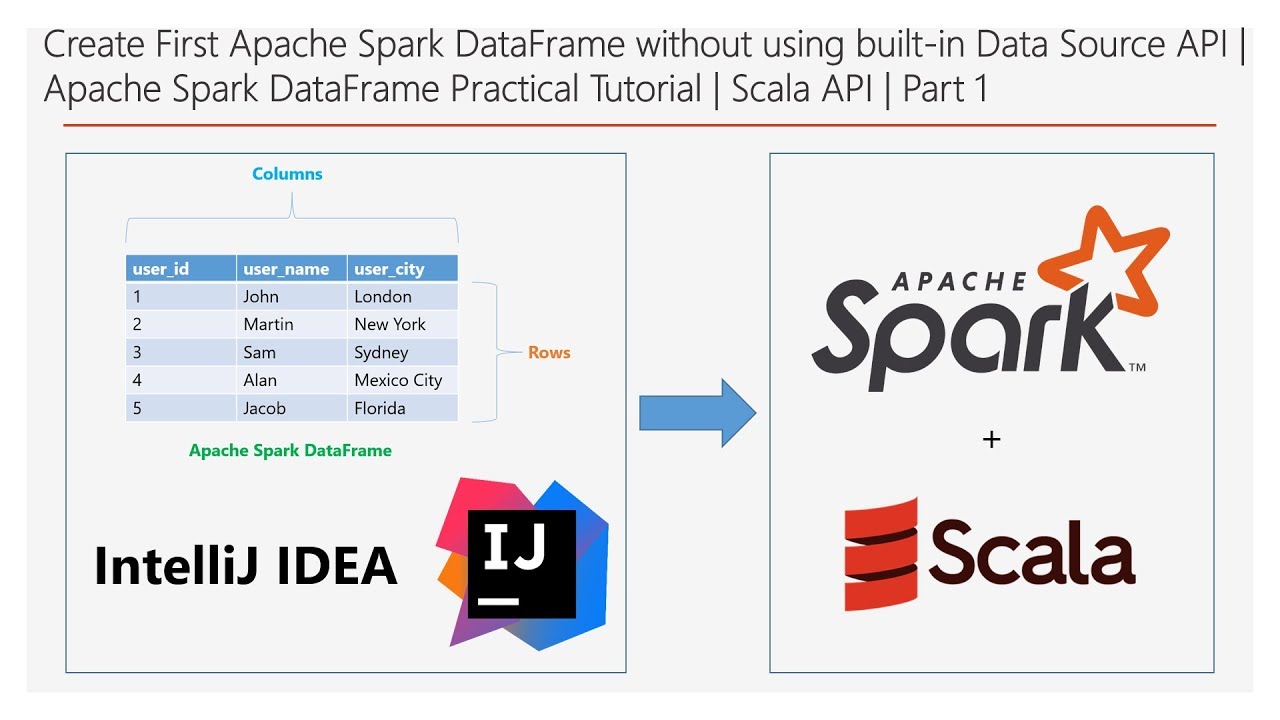

A DataFrame is a distributed collection of data organized into named columns. Conceptually, it is equivalent to a relational table, backed by good optimization techniques. DataFrames can process data ranging from kilobytes to petabytes, on anything from a single-node cluster to a large cluster. They also benefit from state-of-the-art optimization and code generation through the Catalyst optimizer, Spark SQL's tree transformation framework. By default, the SparkContext object is initialized with the name sc when the spark-shell starts. Let us consider an example of employee records in a JSON file named employee.

Spark has an easy-to-use API for handling structured and unstructured data called the DataFrame. Every DataFrame has a blueprint called a schema, which can contain general-purpose data types, such as string and integer types, as well as data types specific to Spark, such as StructType. In Spark, DataFrames are distributed collections of data organized into rows and columns. Each column in a DataFrame has a name and an associated type. DataFrames are similar to traditional database tables, which are structured and concise; we can think of them as relational tables with better optimization techniques. Spark DataFrames can be created from various sources, such as Hive tables, log tables, external databases, or existing RDDs, and they allow the processing of huge amounts of data. When Apache Spark 1.… RDDs do not perform well when there is not much storage space left in memory or on disk, because they exhaust the available space. Besides, Spark RDDs have no concept of a schema, the structure that defines the objects in a dataset. RDDs store both structured and unstructured data together, which is not very efficient. RDDs also have no built-in way to rewrite a computation so that it runs more efficiently, and they do not allow us to debug errors during runtime.

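When a managed table is dropped (one saved without a custom table path, so Spark stored it under a default path in the warehouse directory), the default table path is removed too.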

This tutorial shows you how to load and transform U. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks:

- Create a DataFrame with Python.
- View and interact with a DataFrame.

PySpark DataFrames are lazily evaluated, and they are implemented on top of RDDs. When Spark transforms data, it does not compute the transformation immediately but plans how to compute it later; the computation starts only when an action such as collect() is explicitly called. This notebook shows the basic usage of the DataFrame, geared mainly toward new users. PySpark applications start by initializing a SparkSession, which is the entry point of PySpark. When you run PySpark in the shell via the pyspark executable, the shell automatically creates the session in the variable spark. A PySpark DataFrame can be created via pyspark.… When the schema is omitted, PySpark infers the corresponding schema by taking a sample from the data.

There are two ways in which a big data engineer can transform files: Spark DataFrame methods or Spark SQL functions. I like the Spark SQL syntax since it is more popular than DataFrame methods. How do we manipulate numbers using Spark SQL? This tip focuses on the available numeric functions. Spark SQL has so many numeric functions that they can be divided into three categories: basic, binary, and statistical. Our manager has asked us to explore the syntax of the numeric functions available in Azure Databricks. This is how I test my SQL syntax before embedding it in a spark.…

In Python, Spark SQL data types must be constructed, for example StringType(), instead of referenced as singletons. In SparkR, users only need to initialize the SparkSession once; after that, SparkR functions like read.… can use it implicitly. All of the examples on this page use sample data included in the Spark distribution and can be run in the spark-shell, pyspark shell, or sparkR shell.

For Hive support, Spark needs the location of the jars that should be used to instantiate the HiveMetastoreClient. These jars only need to be present on the driver, but if you are running in YARN cluster mode, you must ensure they are packaged with your application. When reading over JDBC with a custom schema, you can also specify partial fields, and the others use the default type mapping.

If you install PySpark using pip, PyArrow can be brought in as an extra dependency of the SQL module with the command pip install pyspark[sql]. In a notebook, you can create a new DataFrame that adds the rows of one DataFrame to another using the union operation.

In this article, I will talk about installing Spark, the standard Spark functionality you will need to work with DataFrames, and finally, some tips for handling the inevitable errors you will face. This article is going to be quite long, so go on and pick up a coffee first. PySpark DataFrames are distributed collections of data that can be processed across multiple machines and that organize data into named columns.

Apache Spark DataFrames provide a rich set of functions (select columns, filter, join, aggregate) that allow you to solve common data analysis problems efficiently. There is no difference in performance or syntax.

From the sidebar on the homepage, you can access Databricks entities: the workspace browser, Catalog Explorer, workflows, and compute. To learn how to navigate Databricks notebooks, see Databricks notebook interface and controls.

Table partitioning is a common optimization approach used in systems like Hive. Spark infers the data types of partitioning columns automatically; when type inference is disabled, string type will be used for the partitioning columns. This behavior is controlled by the spark.… configuration. To keep the behavior in 1.… To keep the old behavior, set spark.… For the compression codec, acceptable values include: none, uncompressed, snappy, gzip, lzo.
