Spark read csv

DataFrames are distributed collections of data organized into named columns. Use spark. In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a Spark DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using Scala.

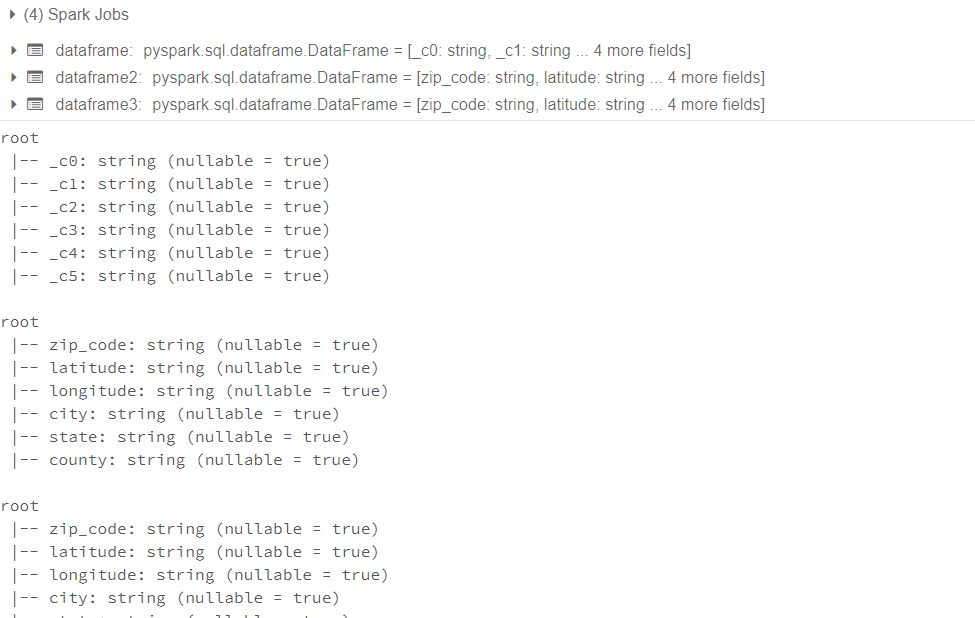

In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file, using PySpark examples.

You can read a CSV file using csv("path") or format("csv"). When you use the format("csv") method, you can also specify data sources by their fully qualified name, but for built-in sources you can simply use their short names (csv, json, parquet, jdbc, text, etc.). Refer to the zipcodes dataset.

If your input file has a header with column names, you need to explicitly specify True for the header option using option("header", True). Without it, the API treats the header as a data record.

PySpark reads all columns as strings (StringType) by default. Later sections explain how to read the schema (inferSchema) from the header record and derive the column types based on the data.
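The two reader styles described above can be sketched roughly as follows. This is a minimal sketch, assuming an active SparkSession is passed in as `spark`; the helper names and the file paths in the usage comments are hypothetical and not taken from the original tutorial.

```python
def read_with_header(spark, path):
    """Read CSV data with csv(path), treating the first line as column names.

    `path` may be a single file, a directory, or a list of file paths.
    """
    return spark.read.option("header", True).csv(path)


def read_with_format(spark, path):
    """Same read, expressed with the short source name "csv" and load()."""
    return spark.read.format("csv").option("header", True).load(path)


# Usage, assuming `spark` is an active SparkSession and the paths exist
# (all paths below are hypothetical):
#   df_one = read_with_header(spark, "data/input.csv")                 # single file
#   df_many = read_with_header(spark, ["data/a.csv", "data/b.csv"])    # several files
#   df_dir = read_with_format(spark, "data/")                          # all files in a directory
```

Without the header option, the first line of each file would be returned as an ordinary data row.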


This function will go through the input once to determine the input schema if inferSchema is enabled. To avoid that extra pass over the data, disable the inferSchema option or specify the schema explicitly using schema. For the extra options, refer to Data Source Option for the version you use.
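The trade-off between inferring a schema and supplying one can be sketched like this. It is only an illustration, assuming an active SparkSession passed in as `spark`; the helper names and the column names in `EXAMPLE_SCHEMA` are hypothetical.

```python
def read_inferring_schema(spark, path):
    """Let Spark derive column types; this costs an extra pass over the input."""
    return (
        spark.read
        .option("header", True)
        .option("inferSchema", True)
        .csv(path)
    )


# Hypothetical columns, written as a DDL string so no extra imports are needed.
EXAMPLE_SCHEMA = "id INT, city STRING, state STRING"


def read_with_schema(spark, path, schema=EXAMPLE_SCHEMA):
    """Supply the schema up front so Spark does not scan the data to infer it."""
    return spark.read.option("header", True).schema(schema).csv(path)
```

Passing the schema explicitly is usually the better choice for large inputs, since the types are known before any data is read.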


Spark SQL provides spark. The option function can be used to customize the behavior of reading or writing, such as controlling the header, the delimiter character, the character set, and so on. Other generic options can be found in Generic File Source Options.

The encoding option works in both directions: for reading, it decodes the CSV files using the given encoding type; for writing, it specifies the encoding (charset) of the saved CSV files.
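As a rough sketch of how such options might be passed on both the read and the write side, assuming an active SparkSession `spark` and an existing DataFrame `df`: the helper names, the default separator, and the choice of overwrite mode are illustrative assumptions, not something the original text prescribes.

```python
def read_delimited(spark, path, sep="|", encoding="UTF-8"):
    """Read CSV data with a custom separator and character set."""
    return spark.read.options(header=True, sep=sep, encoding=encoding).csv(path)


def write_csv(df, path, encoding="UTF-8"):
    """Write a DataFrame as CSV with a header row in the given charset.

    mode("overwrite") is an assumption made for this sketch; it replaces
    any existing output at `path`.
    """
    df.write.options(header=True, encoding=encoding).mode("overwrite").csv(path)


# Usage, assuming `spark` is an active SparkSession (paths are hypothetical):
#   df = read_delimited(spark, "data/pipe_separated.csv")
#   write_csv(df, "out/result_csv")
```

Note that Spark writes the output as a directory of part files at the given path rather than as a single CSV file.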



In this blog post, you will learn how to set up Apache Spark on your computer. This means you can learn Apache Spark with a local install at no cost. We have the method spark. Here is how to use it.


Note that Spark tries to parse only the required columns in CSV under column pruning. Custom date formats follow the formats at Datetime Patterns. For reading, the header option uses the first line as the names of columns; its default value is false. This overrides spark. Enabled if the time parser policy has legacy settings or if no custom date or timestamp pattern was provided.
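A custom date pattern combined with the header option might look like the sketch below. It assumes an active SparkSession passed in as `spark`; the helper name, the column names, and the default pattern are hypothetical choices for illustration.

```python
# Hypothetical columns; the DATE column is parsed using the dateFormat option.
EVENT_SCHEMA = "event_id INT, event_date DATE"


def read_with_dates(spark, path, date_format="yyyy-MM-dd"):
    """Read CSV data whose date column follows a custom datetime pattern."""
    return (
        spark.read
        .option("header", True)             # first line holds the column names
        .option("dateFormat", date_format)  # pattern syntax per Datetime Patterns
        .schema(EVENT_SCHEMA)
        .csv(path)
    )


# Usage, assuming `spark` is an active SparkSession (path is hypothetical):
#   df = read_with_dates(spark, "data/events.csv", date_format="dd/MM/yyyy")
```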


Below are some of the most important options explained with examples.

For example, you may want a date column with the value "" to be set to null on the DataFrame.

When a record has more tokens than the length of the schema, the extra tokens are dropped. When a record has fewer tokens than the length of the schema, the missing fields are set to null.

Another option defines how the CsvParser will handle values with unescaped quotes. Depending on the setting, the parser can accumulate all characters until the delimiter or a line ending is found in the input.

You can provide a custom path to the badRecordsPath option to record corrupt records to a file.
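The handling of records with too many or too few tokens can be illustrated in plain Python. This is not Spark's parser, only a small stand-in that mimics the described behavior using the standard csv module; the function names, column names, and sample data are made up for the example.

```python
import csv
import io


def fit_to_schema(tokens, columns):
    """Drop tokens beyond the schema length and pad missing ones with None."""
    fitted = list(tokens[:len(columns)])
    fitted += [None] * (len(columns) - len(fitted))
    return dict(zip(columns, fitted))


def parse_permissively(text, columns):
    """Parse CSV text, fitting every non-empty record to the given columns."""
    reader = csv.reader(io.StringIO(text))
    return [fit_to_schema(row, columns) for row in reader if row]


sample = "1,Austin,TX\n2,Boston\n3,Denver,CO,extra\n"
rows = parse_permissively(sample, ["id", "city", "state"])
for row in rows:
    print(row)
```

The second record comes back with `state` set to `None`, and the fourth token of the third record is discarded, which mirrors the two rules stated above.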
