Stable diffusion huggingface

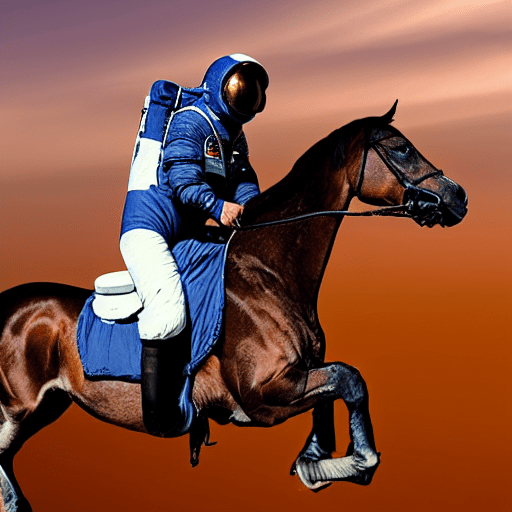

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. For more detailed instructions, use cases, and examples in JAX, follow the instructions here.

This model card gives an overview of all available model checkpoints. For more detailed model cards, please have a look at the model repositories listed under Model Access. For the first version, 4 model checkpoints are released. Higher versions have been trained for longer and are thus usually better in terms of image generation quality than lower versions.

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference.

The first priority is to speed up Stable Diffusion as much as possible, so that we can generate as many pictures as possible in a given amount of time. We aim to generate a beautiful photograph of an old warrior chief and will later try to find the best prompt to generate such a photograph. See the documentation on reproducibility for more information. The default run used full float32 precision and ran the default number of inference steps. The easiest speed-ups come from switching to float16 (half) precision and simply running fewer inference steps. We strongly suggest always running your pipelines in float16, since so far we have very rarely seen quality degradations caused by it. The number of inference steps is tied to the denoising scheduler we use, so choosing a more efficient scheduler could help us decrease the number of steps.
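Both speed-ups above are easy to reason about with a little arithmetic. The sketch below is illustrative only and rests on two assumptions:

* a UNet of roughly 860M parameters, the size reported for Stable Diffusion v1 in the CompVis repository;
* a scheduler that spreads its denoising steps evenly over 1,000 training timesteps, similar in spirit to DDIM's "leading" spacing.

The helper names are hypothetical and are not diffusers APIs, and the step counts are arbitrary example values.

```python
import struct

# Assumption: ~860M UNet parameters, the size reported for Stable Diffusion v1.
UNET_PARAMS = 860_000_000


def weight_bytes(n_params: int, fmt: str) -> int:
    """Bytes needed to store n_params values in a struct format code.

    'f' is IEEE-754 float32 (4 bytes); 'e' is IEEE-754 float16 (2 bytes).
    """
    return n_params * struct.calcsize(fmt)


def evenly_spaced_timesteps(num_inference_steps: int, num_train_timesteps: int = 1000) -> list:
    """Pick a descending subset of training timesteps, one per denoising step.

    Simplified 'leading' spacing; real schedulers may add an offset or space steps differently.
    """
    if not 0 < num_inference_steps <= num_train_timesteps:
        raise ValueError("num_inference_steps must be in [1, num_train_timesteps]")
    step_ratio = num_train_timesteps // num_inference_steps
    return [i * step_ratio for i in range(num_inference_steps)][::-1]


if __name__ == "__main__":
    fp32_gb = weight_bytes(UNET_PARAMS, "f") / 1e9
    fp16_gb = weight_bytes(UNET_PARAMS, "e") / 1e9
    print(f"UNet weights: {fp32_gb:.2f} GB in float32 vs {fp16_gb:.2f} GB in float16")
    # The UNet is evaluated once per selected timestep, so fewer steps means fewer UNet calls.
    for steps in (50, 20):  # illustrative step counts
        ts = evenly_spaced_timesteps(steps)
        print(f"{steps} steps: first timesteps {ts[:3]}, last {ts[-1]}")
```

Halving the bytes per weight halves the weight memory. Cutting the number of selected timesteps cuts the number of UNet evaluations in proportion. A more efficient scheduler is what keeps image quality acceptable at the smaller step count.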

Since the release of Stable Diffusion, many improved versions have been released; they are summarized here.

If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, come here.

Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository, Paper.

To load the weights in half precision, you can tell diffusers to expect them in float16. Note: If you are limited by TPU memory, please make sure to load the FlaxStableDiffusionPipeline in bfloat16 precision instead of the default float32 precision.
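Why bfloat16 rather than float16 on TPU? Both formats use 2 bytes per value. bfloat16 keeps float32's 8-bit exponent and gives up mantissa bits instead, so it covers the same numeric range as float32.

The standard-library sketch below illustrates this. It converts by truncating the low 16 bits of a float32. That is a simplification: hardware conversions usually round to nearest even. All function names are hypothetical.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Pack x as float32 and keep the top 16 bits (sign, 8-bit exponent, 7-bit mantissa)."""
    (bits32,) = struct.unpack("<I", struct.pack("<f", x))
    return bits32 >> 16


def from_bfloat16_bits(b: int) -> float:
    """Expand 16 bfloat16 bits back to a float by zero-filling the dropped mantissa bits."""
    (x,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return x


def fits_float16(x: float) -> bool:
    """True if x can be packed as IEEE-754 half precision (max finite value is 65504)."""
    try:
        struct.pack("<e", x)
        return True
    except (OverflowError, struct.error):
        return False


if __name__ == "__main__":
    x = 1e38  # far outside float16's range, but inside float32's
    print("fits float16:", fits_float16(x))
    print("bfloat16 round trip:", from_bfloat16_bits(to_bfloat16_bits(x)))
```

The trade-off is precision. With only 7 explicit mantissa bits, truncation keeps each value within a relative error of about 2^-7 of the original.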

This repository contains Stable Diffusion models trained from scratch and will be continuously updated with new checkpoints. The following list provides an overview of all currently available models; more are coming soon. Instructions are available here. New Stable Diffusion model: Stable Diffusion 2.

Latent diffusion applies the diffusion process over a lower-dimensional latent space to reduce memory and compute complexity. For details on how Stable Diffusion works and how it differs from the base latent diffusion model, see the Stability AI announcement and our own blog post. You can find the original codebase for Stable Diffusion v1. Explore these organizations to find the best checkpoint for your use case! The table below summarizes the available Stable Diffusion pipelines, their supported tasks, and an interactive demo.

Misuse and Malicious Use: Using the model to generate content that is cruel to individuals is a misuse of this model.

The output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention. For inpainting, the UNet has 5 additional input channels (4 for the encoded masked image and 1 for the mask itself), whose weights were zero-initialized after restoring the non-inpainting checkpoint.

The hardware, runtime, cloud provider, and compute region were used to estimate the carbon impact.

You can load bfloat16 weights by telling diffusers to load them from the "bf16" branch.

Training Data: The model developers used the following dataset for training the model: LAION-2B (en) and subsets thereof (see next section). Training Procedure: Stable Diffusion v is a latent diffusion model that combines an autoencoder with a diffusion model trained in the latent space of the autoencoder.
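The zero-initialization of the inpainting UNet's extra input channels can be illustrated in plain Python. The new input-channel weights start at zero, so the expanded first convolution initially gives exactly the same output as the original one, whatever the extra channels contain. Fine-tuning therefore starts from the behavior of the restored checkpoint.

This sketch makes several simplifying assumptions:

* it uses a 1x1 convolution with tiny made-up weights, whereas the real input convolution uses larger kernels and many more output channels;
* the order of the extra channels here is arbitrary, since a real checkpoint expects whatever order it was trained with;
* all names are hypothetical.

```python
from typing import List

Kernel = List[List[float]]       # k x k spatial kernel
ConvWeight = List[List[Kernel]]  # [out_channels][in_channels] -> Kernel


def zero_expand_in_channels(weight: ConvWeight, extra: int) -> ConvWeight:
    """Copy a conv weight and append `extra` zero-filled input channels to every output channel."""
    expanded = []
    for kernels in weight:
        k = len(kernels[0])
        copied = [[row[:] for row in kern] for kern in kernels]
        zeros = [[[0.0] * k for _ in range(k)] for _ in range(extra)]
        expanded.append(copied + zeros)
    return expanded


def conv1x1_pixel(weight: ConvWeight, pixel: List[float]) -> List[float]:
    """Apply a 1x1 convolution to a single pixel's channel vector."""
    if any(len(kernels) != len(pixel) for kernels in weight):
        raise ValueError("input channel count does not match the weight")
    return [sum(kern[0][0] * x for kern, x in zip(kernels, pixel)) for kernels in weight]


if __name__ == "__main__":
    # Hypothetical "pretrained" 1x1 conv: 2 output channels, 4 latent input channels.
    original = [[[[0.5]], [[-1.0]], [[0.25]], [[2.0]]],
                [[[1.5]], [[0.0]], [[-0.5]], [[1.0]]]]
    # 4 latent channels + 5 extra (4 masked-image latent + 1 mask) = 9 input channels.
    inpaint = zero_expand_in_channels(original, extra=5)

    latent = [0.1, -0.2, 0.3, 0.4]
    extra_inputs = [0.9, -0.7, 0.2, 0.6, 1.0]  # arbitrary values for the 5 extra channels
    print(conv1x1_pixel(original, latent))
    print(conv1x1_pixel(inpaint, latent + extra_inputs))  # same values as the line above
```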

Why is this important? The smaller the latent space, the faster you can run inference and the cheaper the training becomes. How small is the latent space?
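One way to answer that is to compare element counts. The sketch below assumes the autoencoder configuration used by Stable Diffusion v1 and v2: 4 latent channels and a spatial downsampling factor of 8. Under that configuration, a 512x512 RGB image becomes a 4x64x64 latent. The function names are hypothetical.

```python
from math import prod
from typing import Tuple


def latent_shape(height: int, width: int, downsample: int = 8,
                 latent_channels: int = 4) -> Tuple[int, int, int]:
    """Shape (channels, height, width) of the autoencoder latent for an image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError(f"height and width must be divisible by {downsample}")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height: int, width: int, image_channels: int = 3, **kwargs) -> float:
    """How many times fewer values the diffusion model processes in latent space than in pixel space."""
    pixels = image_channels * height * width
    return pixels / prod(latent_shape(height, width, **kwargs))


if __name__ == "__main__":
    for size in (512, 768):
        print(size, latent_shape(size, size), compression_ratio(size, size))
```

Under these assumptions the ratio is 48 for any size divisible by 8. Both element counts scale with height times width, so 3 / (4 / 64) = 48.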

Excluded uses are described below. The autoencoding part of the model is lossy. The model was trained on a large-scale dataset, LAION-5B, which contains adult material and is not fit for product use without additional safety mechanisms and considerations. No additional measures were used to deduplicate the dataset. Further, the model's ability to generate content from non-English prompts is significantly worse than from English-language prompts.

Currently six Stable Diffusion checkpoints are provided, which were trained as follows.

Our goal was to generate a photo of an old warrior chief. For more information on optimization or other guides, I recommend taking a look at the following: Textual inversion, Distributed inference with multiple GPUs, Improve image quality with deterministic generation, Control image brightness, Prompt weighting, and Improve generation quality with FreeU.