Tacotron 2 online


Tacotron 2 - PyTorch implementation with faster-than-realtime inference. This implementation includes distributed and automatic mixed precision support and uses the LJSpeech dataset. Visit our website for audio samples using our published Tacotron 2 and WaveGlow models. Training from a pre-trained model can lead to faster convergence. By default, the dataset-dependent text embedding layers are ignored. When performing mel-spectrogram to audio synthesis, make sure Tacotron 2 and the mel decoder were trained on the same mel-spectrogram representation. This implementation uses code from the following repos: Keith Ito, Prem Seetharaman, as described in our code.
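The requirement that both models share one mel-spectrogram representation can be checked mechanically before synthesis. The following is a minimal sketch, not part of the implementation described above: the helper `mel_config_mismatches`, the parameter names, and the numeric values are all assumptions chosen for illustration.

```python
# Illustrative check that the spectrogram predictor and the mel decoder agree
# on the mel-spectrogram representation. The key names and the values used
# below are assumptions for this sketch, not settings taken from the source.

MEL_KEYS = (
    "sampling_rate",
    "filter_length",
    "hop_length",
    "win_length",
    "n_mel_channels",
    "mel_fmin",
    "mel_fmax",
)


def mel_config_mismatches(acoustic_cfg, vocoder_cfg, keys=MEL_KEYS):
    """Return {key: (acoustic_value, vocoder_value)} for every differing key."""
    mismatches = {}
    for key in keys:
        acoustic_value = acoustic_cfg.get(key)
        vocoder_value = vocoder_cfg.get(key)
        if acoustic_value != vocoder_value:
            mismatches[key] = (acoustic_value, vocoder_value)
    return mismatches


# Hypothetical configuration for the spectrogram predictor.
tacotron2_cfg = {
    "sampling_rate": 22050,
    "filter_length": 1024,
    "hop_length": 256,
    "win_length": 1024,
    "n_mel_channels": 80,
    "mel_fmin": 0.0,
    "mel_fmax": 8000.0,
}

# Hypothetical mel decoder configuration that differs in one setting.
vocoder_cfg = dict(tacotron2_cfg, mel_fmax=11025.0)

if __name__ == "__main__":
    for key, (a, b) in mel_config_mismatches(tacotron2_cfg, vocoder_cfg).items():
        print(f"{key}: acoustic model uses {a}, mel decoder uses {b}")
```

An empty result means the two configurations agree on every listed key; any reported key is a reason to retrain or pick a different checkpoint before pairing the models.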


TensorFlow implementation of DeepMind's Tacotron. Suggested hparams: feel free to toy with the parameters as needed. The previous tree shows the current state of the repository (separate training, one step at a time). Step 1: Preprocess your data. Step 2: Train your Tacotron model. Yields the logs-Tacotron folder. Step 4: Train your Wavenet model. Yields the logs-Wavenet folder. Step 5: Synthesize audio using the Wavenet model.


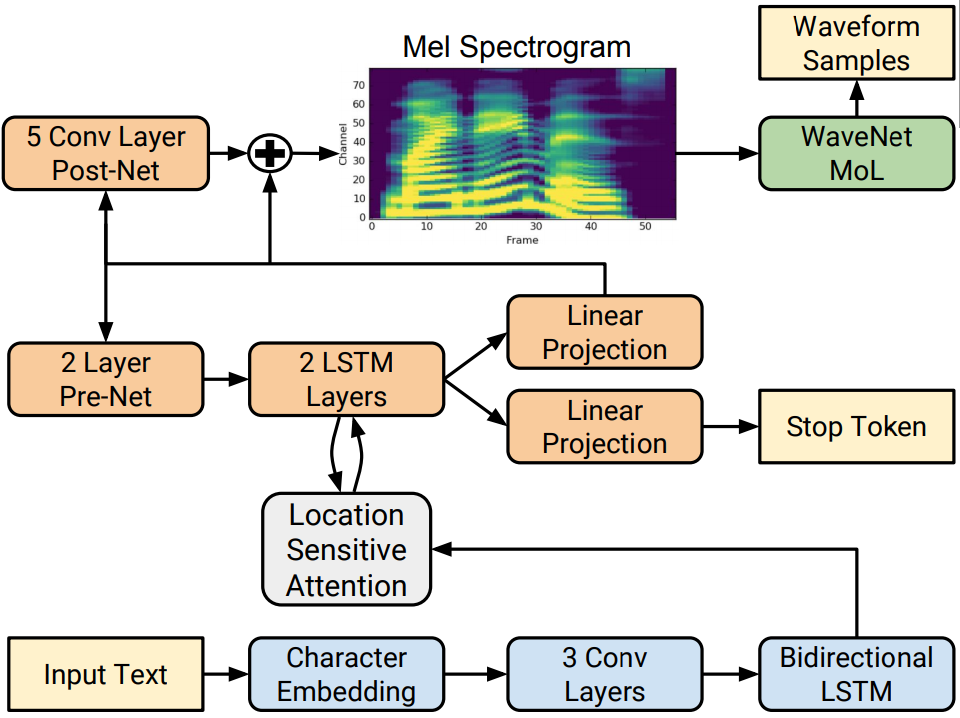

The Tacotron 2 and WaveGlow models form a text-to-speech system that enables users to synthesise natural-sounding speech from raw transcripts without any additional prosody information. The Tacotron 2 model produces mel spectrograms from input text using an encoder-decoder architecture. WaveGlow is also available via Torch Hub. This implementation of the Tacotron 2 model differs from the model described in the paper. To run the example, you need some extra Python packages installed. The Tacotron 2 model pre-trained on the LJ Speech dataset can then be loaded and prepared for inference.
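Loading the pre-trained model for inference might look like the sketch below. The repository string and entry-point name are the ones listed on the PyTorch Hub page for NVIDIA's Tacotron 2 as far as we know; treat them, and the `model_math` argument, as assumptions to verify against the version you install. The wrapper function `load_tacotron2` is a hypothetical helper, and PyTorch is imported lazily so the module can be inspected without it.

```python
# Sketch of loading the LJ Speech checkpoint through Torch Hub.
# HUB_REPO and TACOTRON2_ENTRY are assumed from the PyTorch Hub listing.
HUB_REPO = "NVIDIA/DeepLearningExamples:torchhub"
TACOTRON2_ENTRY = "nvidia_tacotron2"


def load_tacotron2(device="cuda", model_math="fp16"):
    """Fetch the pre-trained Tacotron 2 model and prepare it for inference.

    Requires PyTorch, network access on first use, and (by default) a GPU.
    """
    import torch  # imported here so this file loads even without PyTorch

    model = torch.hub.load(HUB_REPO, TACOTRON2_ENTRY, model_math=model_math)
    model = model.to(device)  # move the weights to the target device
    model.eval()              # switch the module to evaluation mode
    return model
```

Calling `load_tacotron2()` downloads the weights on first use and returns a model in evaluation mode; on a machine without a GPU, passing `device="cpu"` together with `model_math="fp32"` is the more plausible combination.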


The feature prediction model can be trained separately. The process of generating speech from a spectrogram is also called vocoding. Before running the following steps, make sure you are inside the Tacotron-2 folder. Behind the scenes, a G2P model is created using the DeepPhonemizer package, and the pretrained weights published by the author of DeepPhonemizer are fetched.

Saurous, Yannis Agiomyrgiannakis, Yonghui Wu.

Tacotron 2 is somewhat robust to spelling errors. In the following examples, one is generated by Tacotron 2, and one is the recording of a human, but which is which?

As mentioned above, the symbol table and indices must match what the pretrained Tacotron2 model expects. When a list of texts is provided, the returned lengths variable represents the valid length of each processed token sequence in the output batch.

Continuing from the previous section, we can instantiate the matching WaveRNN model from the same bundle. The pretrained weights are published on Torch Hub.
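The role of the symbol table and of the returned lengths can be illustrated with a small character-based encoder. This is a sketch under stated assumptions, not the text processor shipped with any pretrained bundle: the symbol string, the use of index 0 for padding, and the helper names `text_to_sequence` and `encode_batch` are all illustrative.

```python
# Minimal character-level text encoding with padding and valid lengths.
# SYMBOLS is an assumed table; a real pretrained model defines its own, and
# the indices produced here must match that table exactly to be usable.
SYMBOLS = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
SYMBOL_TO_ID = {symbol: index for index, symbol in enumerate(SYMBOLS)}
PAD_ID = SYMBOL_TO_ID["_"]  # assumption: the first symbol doubles as padding


def text_to_sequence(text):
    """Lowercase the text and map each known character to its index."""
    return [SYMBOL_TO_ID[ch] for ch in text.lower() if ch in SYMBOL_TO_ID]


def encode_batch(texts):
    """Encode several texts, pad them to equal length, and report lengths.

    Returns (padded, lengths), where lengths[i] is the number of valid
    (non-padding) tokens in padded[i].
    """
    sequences = [text_to_sequence(text) for text in texts]
    lengths = [len(sequence) for sequence in sequences]
    max_len = max(lengths, default=0)
    padded = [seq + [PAD_ID] * (max_len - len(seq)) for seq in sequences]
    return padded, lengths


if __name__ == "__main__":
    padded, lengths = encode_batch(["Hello", "Hi!"])
    print(padded)
    print(lengths)
```

Characters missing from the table are silently dropped in this sketch, which is one reason the table has to match the one used at training time: a different table would either drop input or map it to the wrong embedding rows.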
