Torch shuffle

Artificial intelligence is the process of teaching a machine, using the data it is given, to make predictions about future events.

A matrix in PyTorch is a 2-dimensional tensor whose elements all have the same dtype. We can shuffle the rows of a matrix among themselves, and likewise its columns. To shuffle rows or columns, we can use simple slicing and indexing, just as we do in NumPy. First import the required library; in all the following examples, that library is torch.
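
A minimal sketch of the row and column shuffling described above, assuming a small example matrix built with torch.arange. torch.randperm supplies the random index order; the names matrix, row_perm and col_perm are only illustrative:

```python
import torch

torch.manual_seed(0)  # fixed seed so the permutations are repeatable

# A 2-D tensor (matrix) whose elements all share one dtype.
matrix = torch.arange(12).reshape(3, 4)

# Shuffle rows: index dim 0 with a random permutation of the row indices.
row_perm = torch.randperm(matrix.size(0))
rows_shuffled = matrix[row_perm]

# Shuffle columns: index dim 1 with a random permutation of the column indices.
col_perm = torch.randperm(matrix.size(1))
cols_shuffled = matrix[:, col_perm]

print(rows_shuffled)
print(cols_shuffled)
```

Each row (or column) is moved as a whole, so the values inside it are never mixed with values from other rows.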

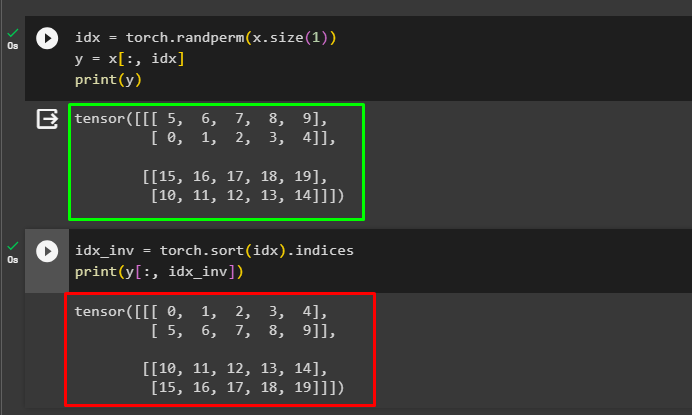

I would like to shuffle the tensor along the second dimension, which is my temporal dimension, to check whether the network is learning something from the temporal dimension or not. It would be good if this shuffling were reproducible. If I understand your use case correctly, you would like to be able to revert the shuffling? If so, this should work: In that case, indexing with the idx created by randperm should work, and you could skip the last part. This would shuffle the x tensor in dim1. Am I right? I tried your approach and this one; however, the output is not the same. The shuffle should keep each 3D block of the data logically intact. Yes, the two codes should be equivalent, as your code just renames some variables. Could you post an example of the input data and the desired output, please?
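
The code from the thread is not reproduced here, so the following is only a sketch of one way to get a reproducible, revertible shuffle along dim 1, assuming an input of shape (batch, time, features). The helper shuffle_dim1 is hypothetical; it seeds a dedicated torch.Generator so the permutation depends only on the seed, and it uses torch.argsort on the permutation to build the inverse:

```python
import torch

def shuffle_dim1(x, seed):
    """Hypothetical helper: shuffle x along dim 1 with a seed-determined permutation."""
    # A dedicated generator makes the permutation depend only on `seed`.
    gen = torch.Generator().manual_seed(seed)
    idx = torch.randperm(x.size(1), generator=gen)
    # x[:, idx] moves each time step's feature vector as a whole.
    return x[:, idx], idx

# Assumed layout: (batch, time, features).
x = torch.arange(2 * 5 * 3).reshape(2, 5, 3)
x_shuffled, idx = shuffle_dim1(x, seed=42)

# The same seed yields the same permutation, so the shuffle is reproducible.
x_again, _ = shuffle_dim1(x, seed=42)

# argsort of a permutation is its inverse, so indexing with it undoes the shuffle.
inverse = torch.argsort(idx)
x_restored = x_shuffled[:, inverse]
```

Because only the temporal order changes, the features of each time step stay together, which keeps every block of the data consistent.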

This can be problematic if the Dataset contains a lot of data. To verify it, you could create a single batch in its original order and a shuffled copy of the same batch, calculate the loss as well as the gradients for each, and compare both approaches.
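
One way to run the check suggested above, sketched with a hypothetical toy model (nn.Linear) and random data: compute the loss and gradients for an ordered batch and for the same batch shuffled with one permutation, then compare them with torch.allclose. The tolerance of 1e-6 is an assumption, not a prescribed value:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model and data, used only to illustrate the comparison.
model = nn.Linear(4, 1)
criterion = nn.MSELoss()  # default reduction='mean' averages over the batch

inputs = torch.randn(8, 4)
targets = torch.randn(8, 1)

def loss_and_grads(x, y):
    model.zero_grad()
    loss = criterion(model(x), y)
    loss.backward()
    # Clone the gradients so the next backward pass cannot overwrite them.
    return loss.detach(), [p.grad.clone() for p in model.parameters()]

# Ordered batch.
loss_ordered, grads_ordered = loss_and_grads(inputs, targets)

# Shuffled batch: the same permutation is applied to inputs and targets.
perm = torch.randperm(inputs.size(0))
loss_shuffled, grads_shuffled = loss_and_grads(inputs[perm], targets[perm])

# Any difference should only come from floating-point rounding.
print((loss_ordered - loss_shuffled).abs().item())
print(all(torch.allclose(a, b, atol=1e-6) for a, b in zip(grads_ordered, grads_shuffled)))
```

With a mean-reduced loss, the two results should differ at most by tiny rounding errors caused by the changed summation order.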

Thank you for your hard work and dedication to creating a great ecosystem of tools and community of users. This feature request proposes adding a standard lib function to shuffle "rows" across an axis of a tensor.
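
The helper below, shuffle_along_dim, is a hypothetical sketch of what such a function could look like; it is not an existing PyTorch API. It builds on torch.randperm and Tensor.index_select, so every slice along the chosen axis is moved as a whole:

```python
import torch

def shuffle_along_dim(t, dim, generator=None):
    """Hypothetical helper: shuffle the slices of `t` along `dim`."""
    # One random permutation of the indices along `dim`,
    # moved to t's device so index_select accepts it.
    perm = torch.randperm(t.size(dim), generator=generator).to(t.device)
    # index_select keeps each slice intact and only reorders the slices.
    return t.index_select(dim, perm)

t = torch.arange(24).reshape(2, 3, 4)
g = torch.Generator().manual_seed(0)  # optional: makes the order repeatable
shuffled = shuffle_along_dim(t, dim=2, generator=g)
print(shuffled)
```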

PyTorch DataLoader is a utility class designed to simplify loading and iterating over datasets while training deep learning models. It provides functionality for batching, shuffling, and processing data, making it easier to work with large datasets. To use the DataLoader in PyTorch, we first import it from torch.utils.data (from torch.utils.data import DataLoader). Batching, shuffling, and processing are used in data preparation to improve the stability, efficiency, and generalization of the model. Batching is the process of grouping data samples into smaller chunks (batches) for efficient training. Automatic batching is the default behavior of DataLoader.
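
A minimal sketch of DataLoader batching and shuffling, assuming a toy TensorDataset of ten samples. Passing a seeded torch.Generator through the generator argument is one way to make the shuffle order repeatable:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset: 10 samples with 1 feature each, plus an integer label per sample.
features = torch.arange(10, dtype=torch.float32).unsqueeze(1)
labels = torch.arange(10)
dataset = TensorDataset(features, labels)

# shuffle=True draws a new random order every epoch; the generator seeds it.
gen = torch.Generator().manual_seed(0)
loader = DataLoader(dataset, batch_size=4, shuffle=True, generator=gen)

for batch_features, batch_labels in loader:
    # Automatic batching stacks the samples into tensors of shape (batch, ...).
    print(batch_features.shape, batch_labels.tolist())
```

With batch_size=4 and ten samples, one epoch yields batches of 4, 4 and 2 samples, because drop_last defaults to False.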

At times in PyTorch it might be useful to shuffle two separate tensors in the same way, so that the shuffled elements form two new tensors that maintain the pairing of elements between them. An example might be shuffling a dataset while ensuring the labels are still matched correctly afterwards. We only need torch for this; it is possible to achieve this in a very similar way in NumPy, but I prefer to use PyTorch for simplicity. These new tensor elements are tensors, and they are paired as follows; the next steps will shuffle the position of these elements while maintaining their pairing. If using NumPy, we can achieve the same thing using np.
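
A minimal sketch of the paired shuffle, assuming a small data tensor and a matching labels tensor (both hypothetical). A single index produced by torch.randperm is applied to both tensors, so each row stays matched with its label:

```python
import torch

# Hypothetical paired tensors: row i of `data` belongs to labels[i].
data = torch.tensor([[0.0, 0.1], [1.0, 1.1], [2.0, 2.1], [3.0, 3.1]])
labels = torch.tensor([0, 1, 2, 3])

# One permutation, reused for both tensors, preserves the pairing.
perm = torch.randperm(data.size(0))
data_shuffled = data[perm]
labels_shuffled = labels[perm]

print(data_shuffled)
print(labels_shuffled)
```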

At the heart of PyTorch data loading utility is the torch. Here is a suggestion to shuffle the data with your own shuffle method, using select. Warning: the len(dataloader) heuristic is based on the length of the sampler used. Then, we can use the following code to shuffle along dimension d independently: Perhaps an off-topic comment: I also wish PyTorch and NumPy had a toolkit dedicated to sampling, such as reservoir sampling across minibatches. The user can write the code in any notebook, such as Jupyter. Dataset for chaining multiple IterableDatasets. Thanks a lot, ptrblck. These small errors are most likely caused by the limited floating-point precision and a different order of operations, so as far as I understand, this is normal and there is nothing we need to do about it. Optional[WorkerInfo]. It's what is used under the hood when you call dataset.
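
The code referred to above is missing, so here is a hedged sketch of one common technique for shuffling independently along a dimension d: draw random keys with torch.rand, sort them along d with argsort, and reorder the tensor with torch.gather. The function name shuffle_independently is hypothetical:

```python
import torch

def shuffle_independently(t, dim):
    """Hypothetical helper: give every slice along `dim` its own random order."""
    # Random keys with the same shape as `t`; argsort along `dim` turns them
    # into an independent permutation for each position in the other dims.
    keys = torch.rand(t.shape, device=t.device)
    perm = keys.argsort(dim=dim)
    # gather picks t's elements along `dim` in the permuted order.
    return t.gather(dim, perm)

t = torch.arange(12).reshape(3, 4)
shuffled = shuffle_independently(t, dim=1)  # each row gets its own permutation
print(shuffled)
```

Unlike indexing with a single randperm, this does not keep columns aligned across rows, which is the point when the goal is independent shuffling.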

You may still have a custom implementation that utilizes it. Note: Dataset is assumed to be of constant size, and any instance of it always returns the same elements in the same order. When called in the main process, this returns None. This class is useful to assemble different existing dataset streams. Here is the general mapping from the type of the element within the batch (input type) to the output type: Related request: these small errors are most likely caused by the limited floating-point precision and a different order of operations, as seen here: Warning: see the Reproducibility, "My data loader workers return identical random numbers", and "Randomness in multi-process data loading" notes for questions related to random seeds.

These modules can be installed using the pip command, which manages Python packages:

    pip install numpy
    pip install torch

Import Libraries

Import the torch library with the import keyword to use its methods for creating and shuffling tensors:

    import torch

Verify the installation of torch by displaying its version on the screen with the following code:

    print(torch.__version__)

Hi ptrblck, I have now tried to shuffle the data, and I noticed that the final loss coming out of the CNN is different when I apply the shuffling to the data as I showed above.
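
To illustrate the ChainDataset and get_worker_info behavior mentioned above, here is a small sketch with a hypothetical RangeStream iterable dataset. The per-worker split inside __iter__ is an assumption about one way to avoid duplicated samples; with num_workers=0, get_worker_info() returns None and each stream is read in full:

```python
from torch.utils.data import ChainDataset, DataLoader, IterableDataset, get_worker_info

class RangeStream(IterableDataset):
    """Hypothetical stream that yields the integers in [start, end)."""

    def __init__(self, start, end):
        super().__init__()
        self.start, self.end = start, end

    def __iter__(self):
        info = get_worker_info()
        # In the main process get_worker_info() returns None,
        # so a single iterator produces the whole range.
        if info is None:
            return iter(range(self.start, self.end))
        # Assumed split for worker processes: stride by the worker count.
        return iter(range(self.start + info.id, self.end, info.num_workers))

# ChainDataset iterates over the first stream, then the second.
chained = ChainDataset([RangeStream(0, 3), RangeStream(10, 13)])
print(list(chained))

loader = DataLoader(chained, batch_size=2)  # num_workers defaults to 0
print([batch.tolist() for batch in loader])
```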
