Torch sum

This causes NLP sampling to be impossible.

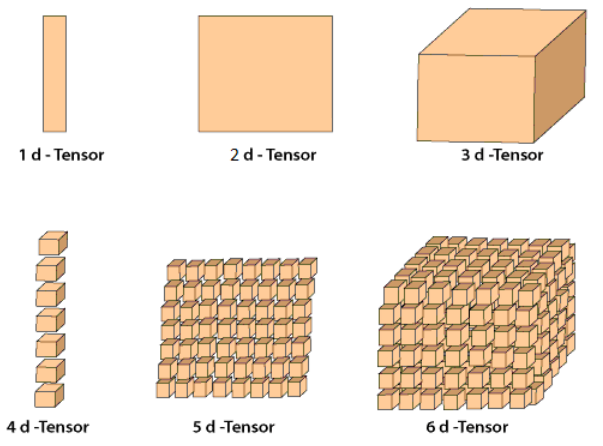

In this tutorial, we take an in-depth look at how to use torch.sum. We first cover its syntax and then walk through its functionality with examples and illustrations to make it easy for beginners. The torch.sum function sums the elements of a PyTorch tensor, either over all elements or along a given dimension (axis). On the surface this may look like a very easy function, but it does not work in an intuitive manner, which gives beginners headaches.

Summing a 2-dimensional tensor along one dimension removes that dimension, so the resulting tensor is 1-dimensional. For the subsequent examples we again start by creating a tensor, this time a 3-dimensional one of size 2x2x3. Summing over all of its elements gives a scalar. Summing along a single dimension removes that dimension, so the resulting tensor is 2-dimensional.
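The behaviour described above can be sketched as follows. This is a minimal illustration, not the tutorial's original code: the tensor values and the variable names are assumptions chosen for the example, and only the 2x2x3 size comes from the text.

```python
import torch

# A 2-dimensional tensor of shape (2, 3); the values are illustrative.
a = torch.tensor([[1., 2., 3.],
                  [4., 5., 6.]])

total = torch.sum(a)            # sum of all elements, a 0-dimensional (scalar) tensor
col_sums = torch.sum(a, dim=0)  # collapses dimension 0 (rows), shape (3,)
row_sums = torch.sum(a, dim=1)  # collapses dimension 1 (columns), shape (2,)

# A 3-dimensional tensor of size 2x2x3, holding the values 0..11.
b = torch.arange(12.).reshape(2, 2, 3)

b_total = torch.sum(b)                      # scalar
b_dim0 = torch.sum(b, dim=0)                # 2-dimensional, shape (2, 3)
b_keep = torch.sum(b, dim=2, keepdim=True)  # keepdim retains the summed axis, shape (2, 2, 1)
```

The `dim` argument names the dimension that disappears from the result, which is usually the part that feels unintuitive at first: `dim=0` on a matrix sums down each column, not across each row.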


In short, if a PyTorch operation supports broadcast, then its Tensor arguments can be automatically expanded to be of equal sizes without making copies of the data. When iterating over the dimension sizes, starting at the trailing dimension, the dimension sizes must either be equal, one of them is 1, or one of them does not exist. If the number of dimensions of x and y are not equal, prepend 1 to the dimensions of the tensor with fewer dimensions to make them equal length. Then, for each dimension size, the resulting dimension size is the max of the sizes of x and y along that dimension. One complication is that in-place operations do not allow the in-place tensor to change shape as a result of the broadcast. Prior versions of PyTorch allowed certain pointwise functions to execute on tensors with different shapes, as long as the number of elements in each tensor was equal. The pointwise operation would then be carried out by viewing each tensor as 1-dimensional. Note that the introduction of broadcasting can cause backwards incompatible changes in the case where two tensors do not have the same shape, but are broadcastable and have the same number of elements.
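The broadcasting rules above can be checked with a short sketch. The shapes and variable names here are assumptions picked for illustration; `torch.broadcast_shapes` is used only to confirm the result shape without doing the arithmetic.

```python
import torch

# Shapes are aligned starting from the trailing dimension:
#   x: 5 x 1 x 4 x 1
#   y:     3 x 1 x 1
# Each pair of sizes is equal, contains a 1, or is missing on one side.
x = torch.ones(5, 1, 4, 1)
y = torch.ones(3, 1, 1)
z = x + y
result_shape = tuple(z.shape)  # max size along each aligned dimension
expected_shape = tuple(torch.broadcast_shapes(x.shape, y.shape))

# In-place operations work only if the in-place tensor keeps its shape.
a = torch.ones(5, 3, 4, 1)
a.add_(y)  # fine: the broadcast result has the same shape as a

b = torch.ones(1, 3, 1)
c = torch.ones(3, 1, 7)
try:
    b.add_(c)  # would require b to grow to (3, 3, 7)
    inplace_failed = False
except RuntimeError:
    inplace_failed = True

# Same number of elements, different shapes: broadcasting now produces
# a (4, 4) result rather than an elementwise result with 4 elements.
d = torch.add(torch.ones(4, 1), torch.ones(4))
```

The last case is the backwards-incompatible one mentioned above: both inputs have four elements, yet the output has sixteen.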

The distributions package contains parameterizable probability distributions and sampling functions. This allows the construction of stochastic computation graphs and stochastic gradient estimators for optimization. This package generally follows the design of the TensorFlow Distributions package. It is not possible to directly backpropagate through random samples. However, there are two main methods for creating surrogate functions that can be backpropagated through. REINFORCE is commonly seen as the basis for policy gradient methods in reinforcement learning, and the pathwise derivative estimator is commonly seen in the reparameterization trick in variational autoencoders. The next sections discuss these two in a reinforcement learning example.
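As a rough sketch of the two estimators, assuming a three-action categorical policy, a made-up reward of 1.0, and a toy objective `z ** 2` (none of which come from the text above), the score function approach goes through `log_prob` while the pathwise approach goes through `rsample`:

```python
import torch
from torch.distributions import Categorical, Normal

torch.manual_seed(0)

# Score function (REINFORCE): the sample itself is not differentiable,
# so the gradient flows through the log-probability of the sampled action.
logits = torch.zeros(3, requires_grad=True)  # hypothetical policy parameters
policy = Categorical(logits=logits)
action = policy.sample()
reward = 1.0                                  # hypothetical reward for that action
surrogate_loss = -policy.log_prob(action) * reward
surrogate_loss.backward()

# Pathwise derivative (reparameterization): rsample() draws the sample as a
# differentiable function of the parameters, so gradients flow through the sample.
mu = torch.tensor(0.5, requires_grad=True)
sigma = torch.tensor(1.0, requires_grad=True)
z = Normal(mu, sigma).rsample()
objective = z ** 2                            # toy objective for illustration
objective.backward()
```

After this runs, `logits.grad` holds the REINFORCE gradient estimate and `mu.grad` and `sigma.grad` hold the pathwise estimates. Calling `sample()` instead of `rsample()` in the second half would cut the graph and leave `mu.grad` unset.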

We encourage everyone following this ticket to try the latest available nightly wheel, newer than Nov 8, which should have the above PR merged, and of course please report back to us if the issue remains.

The Intel PCH does not carry atomics. The following is collected from our internal dev V system that appears to be functioning well.

Can you run the following commands and send the output?

Sorry, I forgot.

The issue is fixed.

Update on "Add sparse COO tensor support to torch.

I want to sum the individual errors and backpropagate the result. All the errors are single float values on the same scale, so the total loss is the sum of the individual losses.
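One way this could look, as a minimal sketch: the parameter `w`, the input `x`, and the three loss terms are all hypothetical stand-ins for whatever the real model produces. The only point is that scalar losses can be combined with `torch.stack(...).sum()` (or plain `+`) and backpropagated once.

```python
import torch

# Hypothetical parameter and input, for illustration only.
w = torch.tensor([1.0, 2.0], requires_grad=True)
x = torch.tensor([3.0, 4.0])

# Three scalar losses that all depend on w.
loss_a = (w * x).sum()    # 1*3 + 2*4 = 11
loss_b = (w ** 2).sum()   # 1 + 4 = 5
loss_c = w.mean()         # 1.5

# Stack the 0-dimensional losses into one tensor and sum them.
# loss_a + loss_b + loss_c would give the same result.
total_loss = torch.stack([loss_a, loss_b, loss_c]).sum()

# A single backward pass accumulates the gradient of every term into w.grad.
total_loss.backward()
```

Keep the losses as tensors until after `backward()`. Converting them to Python floats first (for example with `.item()`) would detach them from the graph and no gradient would reach `w`.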

This is because of torch. This was referenced Jan 23, PyTorch on ROCm.

I was able to make torch.

Should the PCIe atomics support be tested at runtime by the driver, so it doesn't return a wrong result?

Size [4]. This is simply the first item in the tensor.
