Whisper GitHub

If you have questions or want to help, you can find us in the audio-generation channel on the LAION Discord server.

This repository provides fast automatic speech recognition (70x realtime with large-v2) with word-level timestamps and speaker diarization.

Whilst OpenAI's Whisper produces highly accurate transcriptions, the corresponding timestamps are at the utterance level, not per word, and can be inaccurate by several seconds. OpenAI's Whisper also does not natively support batching.

Phoneme-Based ASR: a suite of models fine-tuned to recognise the smallest unit of speech distinguishing one word from another (the phoneme). A popular example model is wav2vec2.

Forced Alignment refers to the process by which orthographic transcriptions are aligned to audio recordings to automatically generate phone-level segmentation.
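
The forced-alignment idea can be illustrated with a small dynamic program. This is a minimal sketch, not WhisperX's implementation: it assumes you already have a frame-by-vocabulary matrix of log-probabilities from a phoneme or character model such as wav2vec2, it ignores the CTC blank token that real wav2vec2 aligners must handle, and it assumes a 20 ms frame duration. `force_align`, `to_seconds`, and `FRAME_SECONDS` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

# Assumption: one acoustic-model frame covers 20 ms (wav2vec2-style models at 16 kHz).
FRAME_SECONDS = 0.02


def force_align(log_probs: Sequence[Sequence[float]], tokens: Sequence[int]) -> List[Tuple[int, int]]:
    """Monotonic Viterbi alignment (simplified: no CTC blank token).

    Every frame is assigned to exactly one token, tokens keep their order,
    and each token receives at least one frame. Returns a half-open
    (start_frame, end_frame) span for each token.
    """
    num_frames, num_tokens = len(log_probs), len(tokens)
    if num_tokens == 0 or num_frames < num_tokens:
        raise ValueError("need at least one frame per token")

    neg_inf = -math.inf
    # score[t][j]: best total log-prob of frames 0..t with frame t assigned to token j.
    score = [[neg_inf] * num_tokens for _ in range(num_frames)]
    # moved[t][j]: True if frame t starts token j (the path came from token j - 1).
    moved = [[False] * num_tokens for _ in range(num_frames)]
    score[0][0] = log_probs[0][tokens[0]]

    for t in range(1, num_frames):
        for j in range(num_tokens):
            stay = score[t - 1][j]
            advance = score[t - 1][j - 1] if j > 0 else neg_inf
            moved[t][j] = advance > stay
            score[t][j] = max(stay, advance) + log_probs[t][tokens[j]]

    # Backtrack from the last frame, which must belong to the last token.
    starts = [0] * num_tokens
    j = num_tokens - 1
    for t in range(num_frames - 1, 0, -1):
        if moved[t][j]:
            starts[j] = t
            j -= 1
    ends = starts[1:] + [num_frames]
    return list(zip(starts, ends))


def to_seconds(spans: Sequence[Tuple[int, int]]) -> List[Tuple[float, float]]:
    """Convert frame spans into (start_s, end_s) timestamps."""
    return [(s * FRAME_SECONDS, e * FRAME_SECONDS) for s, e in spans]
```

With six frames whose most likely symbols are 0, 0, 1, 1, 2, 2, aligning the token sequence `[0, 1, 2]` gives the spans `[(0, 2), (2, 4), (4, 6)]`, which `to_seconds` turns into per-token timestamps. This per-token timing is what utterance-level Whisper output lacks.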

A nearly-live implementation of OpenAI's Whisper, using sounddevice. Requires an existing Whisper install.

The main repo for Stage Whisper: a free, secure, and easy-to-use transcription app for journalists, powered by OpenAI's Whisper automatic speech recognition (ASR) machine learning models. The application is built using Nuxt, a JavaScript framework based on Vue.

Production-ready audio and video transcription app that can run on your laptop or in the cloud.

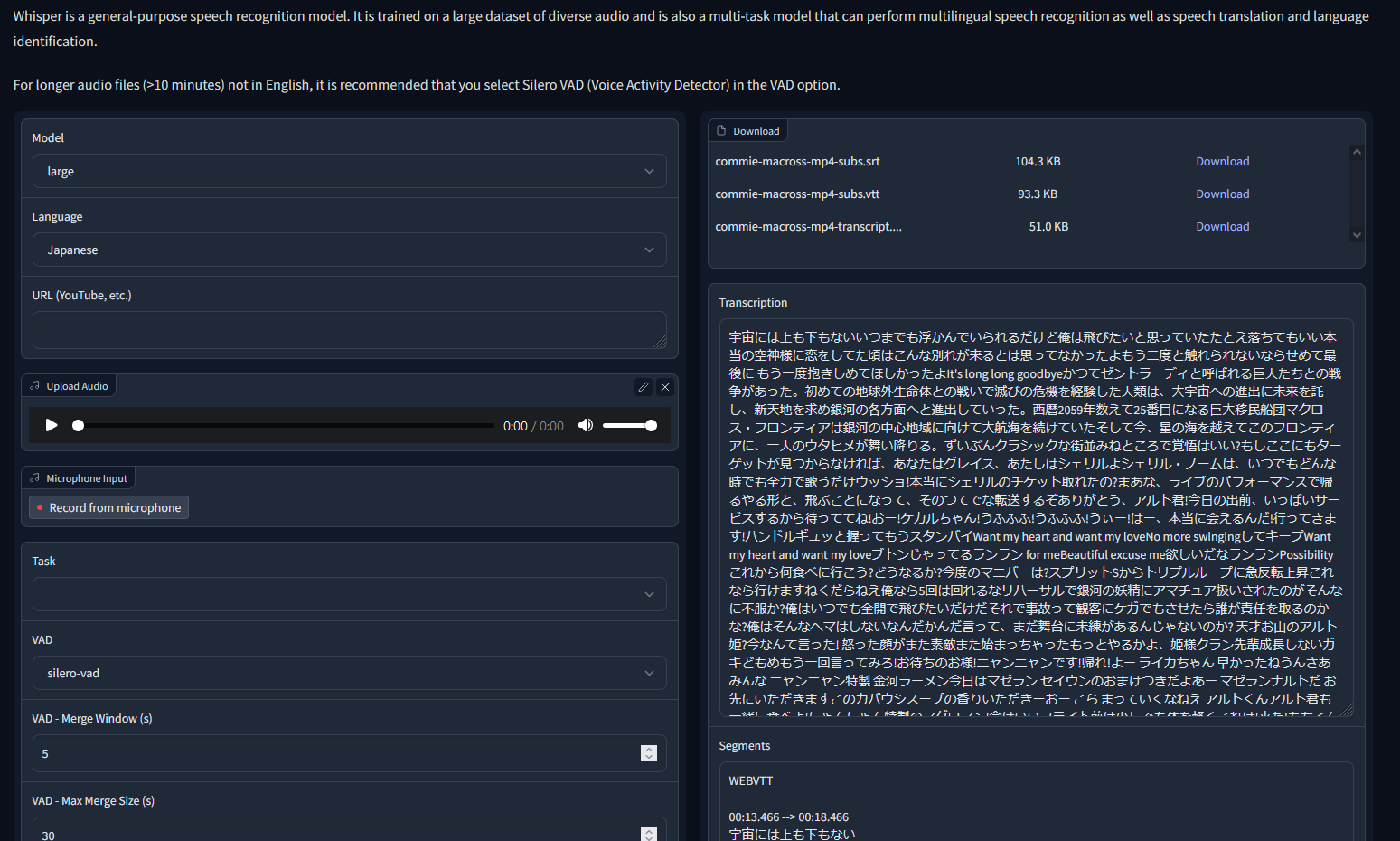

Whisper is a general-purpose speech recognition model. It is trained on a large dataset of diverse audio and is also a multitasking model that can perform multilingual speech recognition, speech translation, and language identification. A Transformer sequence-to-sequence model is trained on various speech processing tasks, including multilingual speech recognition, speech translation, spoken language identification, and voice activity detection. These tasks are jointly represented as a sequence of tokens to be predicted by the decoder, allowing a single model to replace many stages of a traditional speech-processing pipeline. The multitask training format uses a set of special tokens that serve as task specifiers or classification targets. We used Python 3. The codebase also depends on a few Python packages, most notably OpenAI's tiktoken for their fast tokenizer implementation.
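
To make the special-token idea concrete, here is a minimal sketch of how a decoder prompt could be assembled as token strings. The token spellings (`<|startoftranscript|>`, language tags such as `<|en|>`, `<|transcribe|>` / `<|translate|>`, `<|notimestamps|>`) follow the openai-whisper tokenizer as we understand it; the `decoder_prompt` helper is hypothetical, and the real tokenizer maps these strings to integer IDs rather than returning strings.

```python
from typing import List, Optional


def decoder_prompt(language: Optional[str], task: str = "transcribe", timestamps: bool = True) -> List[str]:
    """Build the special-token prefix that tells the decoder which task to perform."""
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unsupported task: {task!r}")
    prompt = ["<|startoftranscript|>"]
    # Omitting the language tag leaves it for the model to predict (language identification).
    if language is not None:
        prompt.append(f"<|{language}|>")
    prompt.append(f"<|{task}|>")
    # Without this token the decoder is expected to emit timestamp tokens as well.
    if not timestamps:
        prompt.append("<|notimestamps|>")
    return prompt
```

For example, `"".join(decoder_prompt("de", "translate", timestamps=False))` yields `<|startoftranscript|><|de|><|translate|><|notimestamps|>`, i.e. a request for German-to-English translation without timestamps. The same decoder handles every task; only this short prefix changes.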

On Wednesday, OpenAI released a new open-source AI model called Whisper that recognizes and translates audio at a level that approaches human recognition ability. It can transcribe interviews, podcasts, conversations, and more. OpenAI trained Whisper on , hours of audio data and matching transcripts in 98 languages collected from the web. According to OpenAI, this open-collection approach has led to "improved robustness to accents, background noise, and technical language." OpenAI describes Whisper as an encoder-decoder transformer, a type of neural network that can use context gleaned from input data to learn associations that can then be translated into the model's output.

Whisper is an automatic speech recognition (ASR) system trained on , hours of multilingual and multitask supervised data collected from the web. We show that the use of such a large and diverse dataset leads to improved robustness to accents, background noise and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English. We are open-sourcing models and inference code to serve as a foundation for building useful applications and for further research on robust speech processing. The Whisper architecture is a simple end-to-end approach, implemented as an encoder-decoder Transformer. Input audio is split into second chunks, converted into a log-Mel spectrogram, and then passed into an encoder. A decoder is trained to predict the corresponding text caption, intermixed with special tokens that direct the single model to perform tasks such as language identification, phrase-level timestamps, multilingual speech transcription, and to-English speech translation. Check out the paper, model card, and code to learn more details and to try out Whisper.
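
The first preprocessing step, splitting input audio into fixed-length chunks, can be sketched in plain Python. This is an illustration under stated assumptions: `split_into_chunks` is a hypothetical helper, the chunk length and sample rate are parameters rather than values taken from the text, and the log-Mel and encoder stages are omitted. In practice the openai-whisper package provides `whisper.pad_or_trim` and `whisper.log_mel_spectrogram` for padding and feature extraction.

```python
from typing import List, Sequence


def split_into_chunks(samples: Sequence[float], sample_rate: int, chunk_seconds: float) -> List[List[float]]:
    """Split mono audio into fixed-length windows, zero-padding the last one."""
    chunk_len = int(round(sample_rate * chunk_seconds))
    if chunk_len <= 0:
        raise ValueError("chunk length must be at least one sample")
    chunks: List[List[float]] = []
    for start in range(0, len(samples), chunk_len):
        chunk = list(samples[start:start + chunk_len])
        # Zero-pad so every chunk handed to the encoder has the same length.
        chunk.extend([0.0] * (chunk_len - len(chunk)))
        chunks.append(chunk)
    return chunks
```

For example, five samples at a toy 2 Hz "sample rate" with 1-second chunks yield three chunks, the last holding one real sample followed by one zero of padding. A fixed input length keeps the encoder's input shape constant regardless of clip duration.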

First, set up a Python virtual environment. The results are summarized in the following GitHub issue:

If you are multilingual, a major way you can contribute to this project is to find phoneme models on Hugging Face, or train your own, and test them on speech for the target language.

This blog provides in-depth explanations of the Whisper model, the Common Voice dataset and the theory behind fine-tuning, with accompanying code cells to execute the data preparation and fine-tuning steps.

WhisperX v4 development is underway with significantly improved diarization. Stay tuned for more updates on this front. More info is available in issue

English speech, female voice transferred from a Polish language dataset: whisperspeech-sample.

You may also need to install ffmpeg, Rust, etc. The phoneme ASR alignment model is language-specific; for tested languages, these models are automatically picked from torchaudio pipelines or Hugging Face.
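
The per-language model choice can be pictured as a lookup table with user overrides. The sketch below is hypothetical: `pick_align_model`, `TORCHAUDIO_DEFAULTS`, and the override mechanism are illustrative names, not WhisperX's API. The single default entry names torchaudio's `WAV2VEC2_ASR_BASE_960H` English bundle, and any Hugging Face model ID passed in is the caller's own choice.

```python
from typing import Dict, Optional, Tuple

# Illustrative defaults only: language code -> torchaudio pipeline bundle name.
TORCHAUDIO_DEFAULTS: Dict[str, str] = {
    "en": "WAV2VEC2_ASR_BASE_960H",
}


def pick_align_model(language: str, hf_overrides: Optional[Dict[str, str]] = None) -> Tuple[str, str]:
    """Return (source, model_name) for a language, preferring explicit overrides."""
    hf_overrides = hf_overrides or {}
    # A user-supplied Hugging Face model wins, e.g. for an untested language.
    if language in hf_overrides:
        return ("huggingface", hf_overrides[language])
    if language in TORCHAUDIO_DEFAULTS:
        return ("torchaudio", TORCHAUDIO_DEFAULTS[language])
    raise ValueError(f"no alignment model configured for {language!r}; pass one via hf_overrides")
```

Raising an error for an unknown language, rather than silently falling back to the English model, avoids aligning speech with a model trained on the wrong set of phonemes.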
