withColumn in PySpark

withColumns returns a new DataFrame by adding multiple columns or replacing the existing columns that have the same names. The colsMap argument is a map of column name to Column, and each Column must only refer to attributes supplied by this DataFrame (Dataset). It is an error to add columns that refer to some other DataFrame.

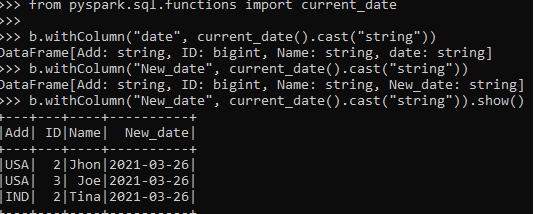

In PySpark, withColumn is a widely used DataFrame transformation function for changing the value of an existing column, converting its data type, or creating a new column. To cast a column to another data type, combine withColumn with the cast function of the Column. To change the value of an existing column, pass the existing column name as the first argument and the value to assign as the second argument; the second argument must be of type Column.


It is a DataFrame transformation operation, meaning it returns a new DataFrame with the specified changes without altering the original DataFrame.


PySpark withColumn is a DataFrame transformation function used to change the value of an existing column, convert its data type, create a new column, and more. To change a data type, use the cast function together with withColumn; for example, the salary column can be converted from String to Integer this way. withColumn can also change the value of an existing column: pass the existing column name as the first argument and the value to assign as the second argument. Note that the second argument must be of type Column. To create a new column, pass the new column name as the first argument of withColumn.

PySpark returns a new DataFrame with the updated values. This article explains how to update or change a DataFrame column using Python examples. Note: the column expression must be an expression over the same DataFrame; adding a column from some other DataFrame raises an error. A typical update multiplies the salary column by three. withColumn either updates or adds a column: when the name passed as the first argument already exists, that column is updated; when the name is new, a new column is created. A conditional update can, for instance, replace the gender value M with Male and F with Female while keeping every other value unchanged. You can also change the data type of a column with withColumn, but you additionally have to use the cast function of the PySpark Column class.


Spark withColumn is a DataFrame function used to add a new column to a DataFrame, change the value of an existing column, convert the data type of a column, or derive a new column from an existing one. This post walks through commonly used DataFrame column operations with Scala examples. withColumn is a transformation function that manipulates the column values of all rows or of selected rows of a DataFrame. Internally, each withColumn call introduces a projection. Calling it many times, for instance in a loop that adds multiple columns, can therefore generate large query plans that cause performance issues and even a StackOverflowException. To avoid this, use select with the multiple columns at once. To create a new column, pass the desired column name as the first argument of withColumn.


One essential operation for altering and enriching your data is withColumn.

Note: all of these functions return a new DataFrame after applying the change instead of updating the existing DataFrame.
