PyTorch loss functions

Your neural networks can do a lot of different tasks. Every task has a different output and needs a different type of loss function. The way you configure your loss functions can make or break the performance of your algorithm.

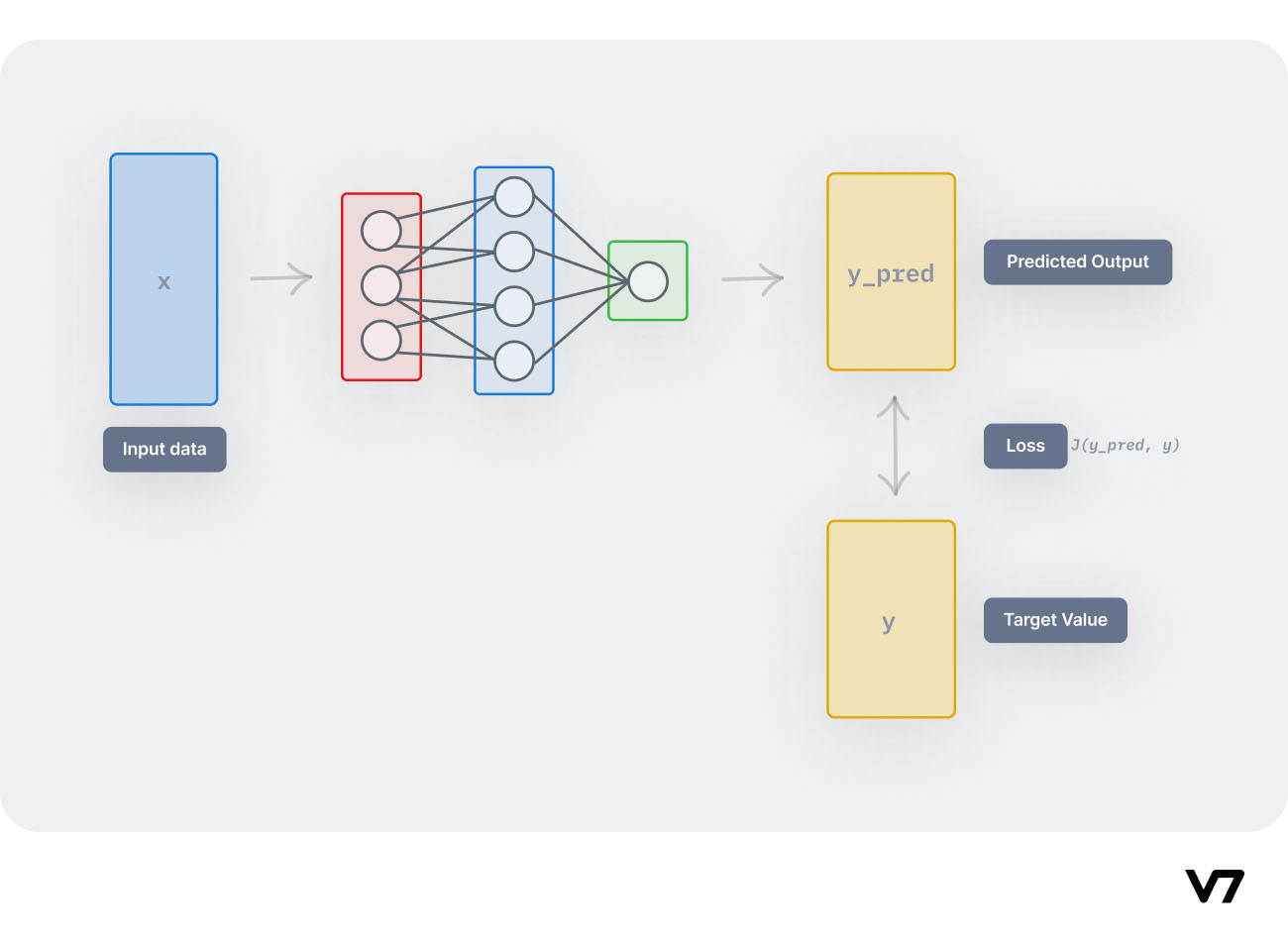

As a data scientist or software engineer, you may have come across situations where the standard loss functions available in PyTorch cannot capture the nuances of your problem. In this blog post, we discuss how to create custom loss functions in PyTorch and integrate them into your neural network model. A loss function, also known as a cost function or objective function, quantifies the difference between the predicted and actual output of a machine learning model. The goal of training is to minimize the value of the loss function; a low loss indicates that the model is making accurate predictions. PyTorch offers a wide range of loss functions for different problems, such as Mean Squared Error (MSE) for regression and Cross-Entropy Loss for classification. However, there are situations where these standard loss functions do not suit your problem.
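
A custom loss in PyTorch is usually written as a subclass of `nn.Module` whose `forward()` returns a scalar tensor, so autograd can differentiate it like any built-in criterion. The sketch below assumes a hypothetical problem where under-predicting costs more than over-predicting; the class name `AsymmetricMSELoss` and its `under_weight` parameter are illustrative inventions, not part of PyTorch.

```python
import torch
import torch.nn as nn


class AsymmetricMSELoss(nn.Module):
    """Hypothetical custom loss: squared error in which under-predictions
    (prediction < target) are multiplied by `under_weight`."""

    def __init__(self, under_weight: float = 2.0):
        super().__init__()
        self.under_weight = under_weight

    def forward(self, pred: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        err = pred - target
        sq = err ** 2
        # Use under_weight where the model predicted too low, 1.0 elsewhere.
        weights = torch.where(
            err < 0,
            torch.full_like(sq, self.under_weight),
            torch.ones_like(sq),
        )
        return (weights * sq).mean()


# Usage: plug it in wherever a built-in criterion would go.
model = nn.Linear(3, 1)
criterion = AsymmetricMSELoss(under_weight=2.0)
x = torch.randn(8, 3)
y = torch.randn(8, 1)
loss = criterion(model(x), y)
loss.backward()  # gradients flow through the custom loss like any built-in
```

With `under_weight=1.0` this reduces to ordinary MSE, which is a handy sanity check when you design a custom loss.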

Loss functions are a crucial component of neural network training: every machine learning model needs to be optimized, and optimization works by reducing the loss so that the model makes correct predictions. But what exactly are loss functions, and how do you use them? A loss function is an expression that measures how close the predicted value is to the actual value. It outputs a value called the loss, which tells us how well our model performs. By reducing this loss over further training, the model can be optimized to output values that are closer to the actual values. PyTorch is a popular open-source Python library for building deep learning models effectively, and it provides many loss functions for different problems. Broadly, these fall into three types: regression, classification, and ranking loss functions. Regression losses are mostly for problems that deal with continuous values, such as predicting age or prices. Classification losses, on the other hand, are for problems that deal with discrete values, such as detecting whether an email is spam or ham.
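
To make the three families concrete, here is a small sketch with one built-in PyTorch criterion per family. The prices, spam scores, and ranking scores are made-up numbers chosen only so the results are easy to check by hand.

```python
import torch
import torch.nn as nn

# Regression: continuous targets (e.g. prices) -> mean squared error.
price_pred = torch.tensor([250.0, 310.0, 180.0])
price_true = torch.tensor([240.0, 300.0, 200.0])
regression_loss = nn.MSELoss()(price_pred, price_true)
# Errors are 10, 10, -20, so the loss is (100 + 100 + 400) / 3 = 200.

# Classification: spam (1) vs ham (0). BCEWithLogitsLoss takes raw scores
# (logits) and applies the sigmoid internally.
spam_logits = torch.tensor([2.0, -1.5, 0.3])
spam_labels = torch.tensor([1.0, 0.0, 1.0])
classification_loss = nn.BCEWithLogitsLoss()(spam_logits, spam_labels)

# Ranking: with target = 1, MarginRankingLoss asks score_a to exceed
# score_b by at least `margin`; per pair it is max(0, -(a - b) + margin).
score_a = torch.tensor([0.8, 0.2])
score_b = torch.tensor([0.5, 0.6])
target = torch.tensor([1.0, 1.0])
ranking_loss = nn.MarginRankingLoss(margin=0.1)(score_a, score_b, target)
# Pair 1 is ranked correctly (loss 0); pair 2 is not (loss 0.5); mean = 0.25.
```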

Classification loss functions are used when the model is predicting a discrete value, such as whether an email is spam or not.

Deep learning training uses a feedback mechanism called loss functions to evaluate mistakes and improve learning trajectories. In this article, we go in depth into loss functions and their implementation in the PyTorch framework. Loss functions measure how close a predicted value is to the actual value. When our model makes predictions that are very close to the actual values on both the training and testing datasets, we have a fairly robust model. Loss functions guide the training process towards correct predictions. Put simply, a loss function is a mathematical function or expression used to measure a model's performance on a dataset.

Loss functions are fundamental to ML model training: in most machine learning projects, there is no way to drive your model toward correct predictions without one. In layman's terms, a loss function is a mathematical function or expression used to measure how well a model is doing on some dataset. Knowing how well a model is doing on a particular dataset gives the developer insight for many decisions during training, such as switching to a new, more powerful model or even changing the loss function itself to a different type. Speaking of types, many loss functions have been developed over the years, each suited to a particular training task. In this article, we explore the loss functions that are part of the PyTorch `nn` module. We then take a deep dive into how PyTorch exposes these loss functions to users through its `nn` module API by building a custom one. As stated earlier, loss functions tell us how well a model does on a particular dataset. Technically, they do this by measuring how close a predicted value is to the actual value. When our model makes predictions that are very close to the actual values on both the training and testing datasets, we have a fairly robust model.
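
PyTorch exposes each loss in two forms: a class in `torch.nn` (an `nn.Module` you instantiate once and call) and a plain function in `torch.nn.functional`. The sketch below, using arbitrary example tensors, shows that both compute the same value and how the `reduction` argument controls whether per-element losses are averaged.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

pred = torch.tensor([[0.5, 1.5], [2.0, 0.0]])
target = torch.tensor([[1.0, 1.0], [2.0, 1.0]])

# Module API: the loss is an nn.Module whose forward() computes the loss.
criterion = nn.MSELoss(reduction="mean")
module_loss = criterion(pred, target)

# Functional API: the same computation as a plain function call.
functional_loss = F.mse_loss(pred, target, reduction="mean")

# reduction="none" keeps one loss value per element instead of averaging.
per_element = nn.MSELoss(reduction="none")(pred, target)
```

The module form is convenient when the loss carries configuration (weights, margins, reductions) that you want to set once; the functional form is handy inside a custom `forward()`.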

The objective of the learning process is to minimize the error given by the loss function, so that the model improves after every iteration of training. Different loss functions suit different problems, and each has been designed with properties such as stable gradient flow during training in mind.
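
The "minimize the loss every iteration" idea maps onto a standard PyTorch training loop. This is a minimal sketch on synthetic data (a noisy line `y = 3x + 1` invented for illustration), using `nn.MSELoss` with plain SGD; the learning rate and step count are arbitrary choices, not tuned values.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Synthetic regression data: y = 3x + 1 plus a little noise.
x = torch.linspace(-1, 1, 64).unsqueeze(1)
y = 3 * x + 1 + 0.05 * torch.randn_like(x)

model = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(200):
    optimizer.zero_grad()          # clear gradients from the previous step
    loss = criterion(model(x), y)  # how far are predictions from targets?
    loss.backward()                # compute d(loss)/d(parameters)
    optimizer.step()               # nudge parameters to reduce the loss
    losses.append(loss.item())

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

Swapping in a different criterion only changes the `criterion = ...` line; the loop itself stays the same.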

Many of the loss functions PyTorch ships with fall broadly into three groups: regression losses, classification losses, and ranking losses. The graph of the MSE loss is a smooth, continuous curve, so its gradient exists everywhere and varies with the size of the error. As the loss value keeps decreasing, the model keeps getting better.
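
The claim about the MSE gradient can be checked with autograd. For the mean-reduced MSE over N elements, the derivative with respect to each prediction is 2(pred - target) / N: defined at every point, zero when the error is zero, and growing linearly with the error. The tensors below are arbitrary example values.

```python
import torch
import torch.nn as nn

pred = torch.tensor([0.0, 1.0, 2.5, -1.0], requires_grad=True)
target = torch.tensor([0.0, 2.0, 2.0, 1.0])

loss = nn.MSELoss()(pred, target)
loss.backward()

# Analytic gradient of mean((pred - target)^2) with respect to pred.
analytic = 2 * (pred.detach() - target) / pred.numel()
print(pred.grad)
print(analytic)
```

Larger errors produce proportionally larger gradients, which is why MSE pushes hard on outliers.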

In this tutorial, we look at different PyTorch loss functions that you can use to train neural networks. These loss functions compute the difference between the model's actual output and the expected output, which is the signal a neural network learns from. This guide will help you pick appropriate PyTorch loss functions for regression and classification.

Classification loss functions deal with discrete values, as in the task of classifying an object as a box, a pen, or a bottle. For regression, `MSELoss` creates a criterion that measures the mean squared error (squared L2 norm) between each element in the input x and the target y. In the PyTorch documentation for classification criteria such as `CrossEntropyLoss`, x is the input, y is the target, w is the class weight, C is the number of classes, and N spans the mini-batch dimension.
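
Using the symbols above, both criteria can be recomputed by hand and compared with PyTorch. In this sketch the class indices (box = 0, pen = 1, bottle = 2), the logits, and the weights w are invented for illustration. Note that with per-class weights and `reduction="mean"`, `CrossEntropyLoss` divides by the sum of the weights of the target classes rather than by N.

```python
import torch
import torch.nn as nn

# MSE: the mean over elements of (x - y)^2.
x_reg = torch.tensor([1.0, 2.0, 3.0])
y_reg = torch.tensor([1.5, 2.0, 1.0])
mse_manual = ((x_reg - y_reg) ** 2).mean()
mse_torch = nn.MSELoss()(x_reg, y_reg)

# Cross-entropy with C = 3 classes (box=0, pen=1, bottle=2) and N = 2 samples.
# x holds unnormalized scores (logits) of shape (N, C); y holds class indices.
x = torch.tensor([[2.0, 0.5, -1.0],
                  [0.1, 0.2, 3.0]])
y = torch.tensor([0, 2])
w = torch.tensor([1.0, 2.0, 0.5])  # per-class weights (arbitrary values)

# Per-sample loss: -w[y_n] * log(softmax(x_n)[y_n]).
log_probs = torch.log_softmax(x, dim=1)
per_sample = -w[y] * log_probs[torch.arange(len(y)), y]
# Weighted mean: divide by the sum of the target-class weights, not by N.
ce_manual = per_sample.sum() / w[y].sum()
ce_torch = nn.CrossEntropyLoss(weight=w)(x, y)
```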