TaskFlow API

You can use TaskFlow decorator functions (for example, @task) to pass data between tasks by providing the output of one task as an argument to another task. Decorators are a simpler, cleaner way to define your tasks and DAGs, and they can be used in combination with traditional operators. In this guide, you'll learn about the benefits of decorators and the decorators available in Airflow. You'll also review an example DAG and learn when you should use decorators and how you can combine them with traditional operators in a DAG.

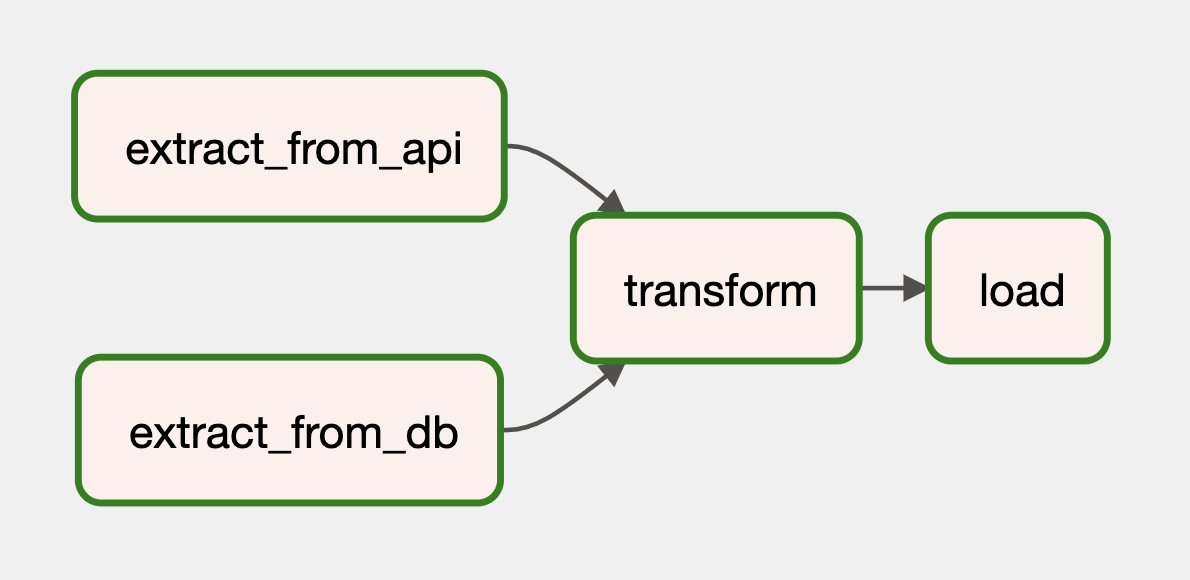

This tutorial builds on the regular Airflow tutorial and focuses specifically on writing data pipelines using the TaskFlow API paradigm, which was introduced in Airflow 2. The data pipeline chosen here is a simple pattern with three separate tasks: Extract, Transform, and Load. A more detailed explanation is given below. If this is the first DAG file you are looking at, note that this Python script is interpreted by Airflow and is a configuration file for your data pipeline. For a complete introduction to DAG files, see the core fundamentals tutorial, which covers DAG structure and definitions extensively.

When orchestrating workflows in Apache Airflow, DAG authors often find themselves at a crossroads: choose the modern, Pythonic approach of the TaskFlow API, or stick to the well-trodden path of traditional operators. Luckily, the TaskFlow API was implemented in such a way that TaskFlow tasks and traditional operators can coexist, offering users the flexibility to combine the best of both worlds. Traditional operators are the building blocks that older Airflow versions employed, and while they are powerful and diverse, they can sometimes lead to boilerplate-heavy DAGs. For users who employ lots of Python functions in their DAGs, TaskFlow tasks represent a simpler way to turn functions into tasks, with a more intuitive way of passing data between them.

Both methodologies have their strengths, but many DAG authors mistakenly believe they must stick to one or the other. This belief can be limiting, especially when certain scenarios would benefit from a mix of both. Some tasks are more succinctly represented with traditional operators, while others benefit from the brevity of the TaskFlow API. And while the TaskFlow API simplifies data passing with direct function-to-function parameter passing, there are scenarios where the explicit nature of XComs in traditional operators can be advantageous for clarity and debugging.

By using both, DAG authors gain access to the breadth of built-in functionality and fine-grained control that traditional operators offer, while also benefiting from the succinct, Pythonic syntax of the TaskFlow API. This dual approach enables more concise DAG definitions, minimizing boilerplate code while still allowing for complex orchestrations. Combining the two also makes it easier to evolve workflows over time.
Additionally, blending the two means that DAGs can benefit from the dynamic task generation inherent in the TaskFlow API, alongside the explicit dependency management provided by traditional operators.

In the Airflow UI, you can view logs, retry failed tasks, and inspect task instances and their XComs.

TaskFlow takes care of moving inputs and outputs between your tasks using XComs, and it calculates dependencies automatically. When you call a TaskFlow function in your DAG file, it is not executed; instead you get an object representing the XCom for its result (an XComArg), which you can then use as input to downstream tasks or operators. If you want to learn more about using TaskFlow, consult the TaskFlow tutorial.

You can access Airflow context variables by adding them as keyword arguments to the decorated function. For a full list of context variables, see context variables.

As mentioned, TaskFlow uses XCom to pass variables to each task. This requires that any variable used as an argument can be serialized.

In Python, decorators are functions that take another function as an argument and extend the behavior of that function. Airflow decorators contain more functionality than a plain Python decorator, but the basic idea is the same: the Airflow decorator extends the behavior of a normal Python function to turn it into an Airflow task, task group, or DAG. The result can be cleaner DAG files that are more concise and easier to read. In most cases, a TaskFlow decorator and the corresponding traditional operator have the same functionality, and you can mix decorators and traditional operators within a single DAG. The TaskFlow API handles passing data between tasks using XCom and infers task dependencies automatically.
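To make the plain Python idea concrete, here is a toy decorator that wraps a function and records every call. `log_calls` and `add` are hypothetical names; this only illustrates the decorator pattern and is not how Airflow implements `@task`.

```python
import functools


def log_calls(func):
    """Wrap `func` so that every call is recorded before it runs."""
    calls = []

    @functools.wraps(func)  # keep the original name and docstring
    def wrapper(*args, **kwargs):
        calls.append((args, kwargs))  # the extra behavior added by the decorator
        return func(*args, **kwargs)  # then delegate to the original function

    wrapper.calls = calls  # expose the call log for inspection
    return wrapper


@log_calls
def add(a, b):
    return a + b
```

Calling `add(2, 3)` still returns `5`, but `add.calls` now also holds `[((2, 3), {})]`. Airflow's decorators follow the same shape, except the wrapper builds an operator instead of logging calls.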

We are creating a DAG, which is the collection of our tasks with dependencies between the tasks. This is a very simple definition, since we just want the DAG to run when we set it up with Airflow, without any retries or complex scheduling. Notice that we are creating this DAG using the @dag decorator, with the Python function name acting as the DAG identifier.

Decorated tasks bring two further benefits:

Reusability: decorated tasks can be reused across multiple DAGs with different parameters.

Extracting the task from a TaskFlow task: if you want to set advanced configurations or use operator methods available to traditional tasks, extracting the underlying traditional task from a TaskFlow task is handy.

Together, these result in DAGs that are not only more adaptable and scalable but also clearer in representing dependencies and data flow.

Out of the box, Airflow supports all built-in types such as int or str, as well as objects decorated with dataclass or attr.

In the ETL pipeline, we invoked the Extract task, obtained the order data from it, sent that data to the Transform task for summarization, and then invoked the Load task with the summarized data. Task dependencies are generated automatically from these function invocations.

Typically you would decorate your classes with dataclass or attr so that their instances can be passed between tasks.

Using the task decorator in Apache Airflow

The task decorator is a feature introduced in Apache Airflow 2. You can assign the output of a called decorated task to a Python object and pass it as an argument into another decorated task. This works not only between TaskFlow functions but also between TaskFlow functions and traditional tasks, and, for completeness, the output of one traditional operator can likewise be used in another traditional operator. The task decorator also supports output types, which can be used to pass data between tasks. In general, it is recommended to pass data as explicit arguments.