Text-generation-webui

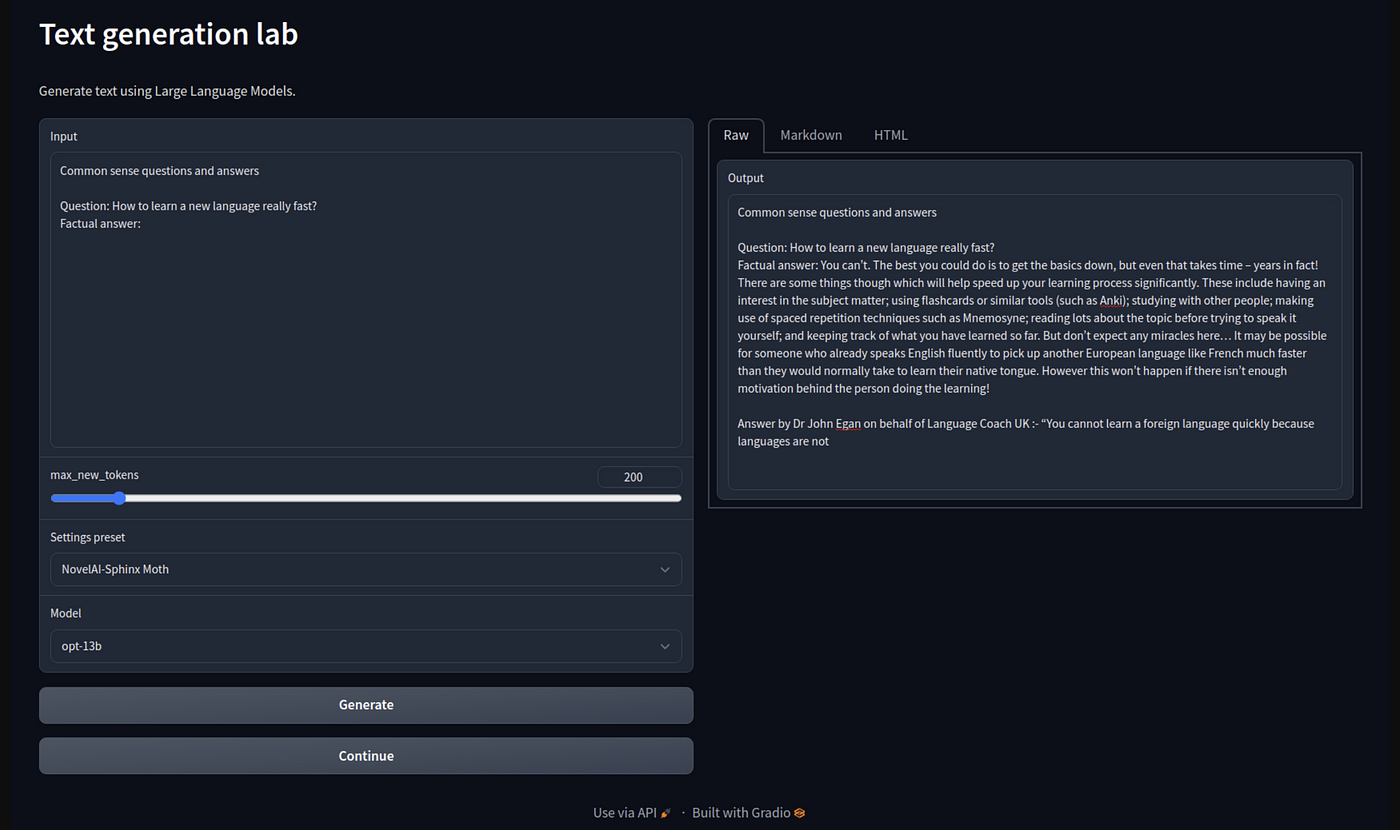

It offers many convenient features, such as managing multiple models and a variety of interaction modes. See this guide for installing on Mac. The GUI is like a middleman, in a good sense, that makes using the models a more pleasant experience.

It provides a user-friendly interface for interacting with large language models and generating text, with features such as model switching, notebook mode, chat mode, and more. There are different installation methods available, including one-click installers for Windows, Linux, and macOS, as well as manual installation using Conda. Models should be placed inside the models folder.


In case you need to reinstall the requirements, you can simply delete that folder and start the web UI again. The script accepts command-line flags.

On Linux or WSL, it can be installed automatically with two commands. If you need nvcc to compile some library manually, a different install command is needed. llama-cpp-python can be installed manually from PyPI using the appropriate command for your hardware.

Models are usually downloaded from Hugging Face. You can use the "Model" tab of the UI to download a model from Hugging Face automatically. It is also possible to download it via the command line.

If you would like to contribute to the project, check out the Contributing guidelines. In August, Andreessen Horowitz (a16z) provided a generous grant to encourage and support my independent work on this project. I am extremely grateful for their trust and recognition.

It is just a small fun project created to study the model's performance based on peer review.


Set up a private, unfiltered, uncensored local AI roleplay assistant in 5 minutes on an average-spec system. Sounds good enough? Then read on! Setting all this up would have been much more complicated a few months back. The OobaBooga Text Generation WebUI is striving to become a go-to, free, open-source solution for local AI text generation using open-source large language models, just as the Automatic WebUI is now pretty much a standard for generating images locally using Stable Diffusion.


It comes down to just a few simple steps. In the dataset, the keys (e.g. somekey, key2) are standardized and relatively consistent across the dataset, and the values (e.g. somevalue, value2) contain the content actually intended to be trained. For Alpaca, the keys are instruction, input, and output, wherein input is sometimes blank. If you have different sets of key inputs, you can make your own format file to match them. The format file is designed to be as simple as possible to enable easy editing to match your needs. When using raw text files as your dataset, the text is automatically split into chunks based on your Cutoff Length, and you get a few basic options to configure them.
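To make the key/value idea concrete, here is a minimal sketch of how a format file might turn Alpaca-style rows into training text. It assumes the format file maps a comma-separated set of keys to a template with %key% placeholders; the template wording, the FORMAT dictionary, and the render helper are illustrative assumptions, not the project's actual code.

```python
# Hypothetical format-file contents (assumed layout): each entry maps a
# comma-separated set of keys to a template with %key% placeholders.
FORMAT = {
    "instruction,output": (
        "### Instruction:\n%instruction%\n\n### Response:\n%output%"
    ),
    "instruction,input,output": (
        "### Instruction:\n%instruction%\n\n"
        "### Input:\n%input%\n\n### Response:\n%output%"
    ),
}


def render(row, fmt=FORMAT):
    """Fill the template whose key set matches the row's non-empty keys."""
    # Blank values (such as an empty Alpaca "input") count as absent.
    present = {k for k, v in row.items() if isinstance(v, str) and v.strip()}
    for keys, template in fmt.items():
        if set(keys.split(",")) == present:
            text = template
            for key in present:
                text = text.replace(f"%{key}%", row[key])
            return text
    raise ValueError(f"no template for keys: {sorted(present)}")


# Two made-up rows: one with an input, one where input is blank.
rows = [
    {"instruction": "Add the numbers.", "input": "2 and 3", "output": "5"},
    {"instruction": "Name a primary color.", "input": "", "output": "Red"},
]
prompts = [render(r) for r in rows]
print(prompts[0])
```

Because a blank input is treated as missing, the second row falls back to the two-key template, which is one plausible way to handle the "input is sometimes blank" case.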


In this article, you will learn what text-generation-webui is and how to install it on Windows. Necessary to use CFG with that loader. Setting this parameter enables CPU offloading for 4-bit models. It seems that the Oobabooga text-generation-webui script you are trying to install is attempting to use this function, which is causing the error.

If you create an extension, you are welcome to host it in a GitHub repository and submit it to the list above.

Download the Windows installer. Models should be placed inside the models folder. Currently gpt2, gptj, gptneox, falcon, llama, mpt, starcoder (gptbigcode), dollyv2, and replit are supported. You can also set values in MiB like --gpu-memory MiB. Set Gradio authentication password in the format "username:password". Pull requests, suggestions, and issue reports are welcome.
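As a rough illustration of the two value formats mentioned here, the sketch below parses a "username:password" string and a memory value with a MiB suffix. The helper names are hypothetical, the sample values are made up, and treating a bare number as GiB is an assumption of this sketch rather than something the text above states.

```python
import re


def parse_auth(value):
    """Split a "username:password" string at the first colon."""
    user, sep, password = value.partition(":")
    if not (sep and user and password):
        raise ValueError('expected "username:password"')
    return user, password


def parse_memory_mib(value):
    """Return a memory limit in MiB.

    Assumption of this sketch: a number without a unit is read as GiB.
    """
    match = re.fullmatch(r"(\d+)\s*(mib|gib)?", value.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized memory value: {value!r}")
    amount = int(match.group(1))
    unit = match.group(2) or "gib"
    return amount if unit == "mib" else amount * 1024


print(parse_auth("alice:s3cret"))
print(parse_memory_mib("3500MiB"))
```

Splitting only at the first colon lets the password itself contain colons.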
