Pyspark filter

PySpark is the Python API for Apache Spark, a popular open-source distributed data processing engine. One of the most common tasks when working with PySpark DataFrames is filtering rows based on certain conditions, which is what PySpark's filter() function does.

In big data processing, PySpark has emerged as a powerful tool for data scientists. It allows for distributed data processing, which is essential when dealing with large datasets. One common operation in data processing is filtering data based on certain conditions. A PySpark DataFrame is a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in Python, but with richer optimizations under the hood. PySpark DataFrames are designed for processing large amounts of structured or semi-structured data. The filter() transformation in PySpark lets you specify conditions that select rows based on column values; like other transformations, it is evaluated lazily and returns a new DataFrame.

In this PySpark article, you will learn how to filter DataFrame rows on columns of string, array, and struct types using single and multiple conditions, and how to filter with isin(), through PySpark (Spark with Python) examples. Note: PySpark Column functions provide several options that can be used with filter().

The syntax of the filter function is DataFrame.filter(condition), where the condition is the expression you want to filter on: either a Column of boolean type or a SQL expression string. where() is an alias for filter().

Use a Column with the condition to filter rows from a DataFrame; this lets you express complex conditions by referring to column names through the DataFrame object, for example df.state or df["state"]. The same example can also be written in other forms; for some of them, you first need to import the relevant function from pyspark.

You can also filter DataFrame rows by using the startswith(), endswith(), and contains() methods of the Column class. If you have a SQL background, you are probably familiar with LIKE and RLIKE (regex LIKE); PySpark provides similar like() and rlike() methods in the Column class to filter values using wildcard characters or regular expressions. You can use rlike() with the (?i) flag to match values case-insensitively.

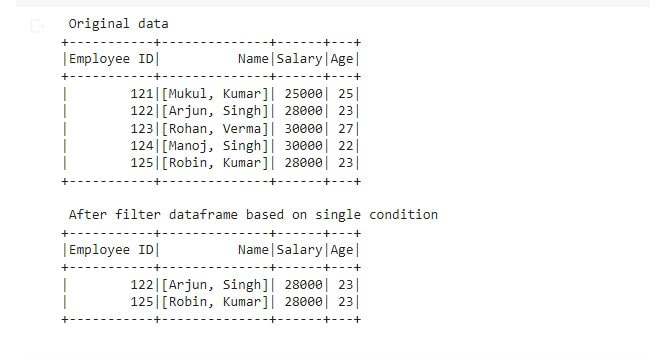

Example: Filter rows with age less than or equal to 25.

To filter on multiple conditions, combine Column expressions with & (and), | (or), and ~ (not). To keep rows whose value appears in a list, use isin(), a boolean expression that evaluates to true if the value of the column is contained in the evaluated values of its arguments.

In PySpark, the DataFrame filter() function selects rows based on conditions on one or more columns. For example, with a DataFrame containing website click data, we may wish to keep only the rows whose platform column contains a certain value and then group the remaining rows by browser. This would allow us to determine the most popular browser type used in website requests. Filtering can be done either with the filter() function or with SQL, and both approaches are covered in this PySpark filter tutorial.

The PySpark filter() function is a powerful tool for data analysis. This guide explains how it works, walks through practical examples, and shows how to keep data filtering in PySpark efficient. Every example operates on a PySpark DataFrame, a distributed collection of data organized into named columns.

If filter() fails, verify that the condition is a valid SQL expression string or Column object, and check for unsupported data types.

Filtering rows as early as possible reduces the amount of data that needs to be processed in subsequent steps.